Digital Signal Processing with Field Programmable Gate Arrays (FPGAs) for Accelerated AI

Digital signal processing with Field Programmable Gate Arrays (FPGAs) has gained relevance in the Artificial Intelligence (AI) domain, where FPGAs can now have an advantage over GPUs and ASICs.

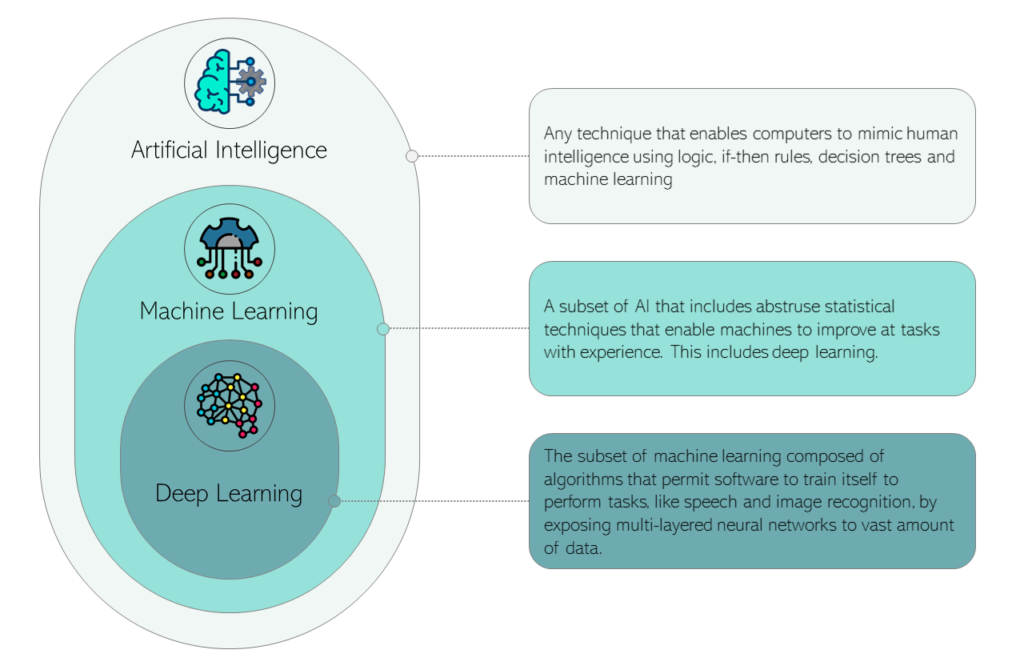

Artificial intelligence (AI) is heralding the next big wave of computing, changing how businesses operate and how people go about their daily lives. AI and Machine Learning (ML) are often used interchangeably, and both terms crop up frequently in discussions of Big Data, analytics, and the broader waves of technological change sweeping through our world.

Artificial Intelligence is the broad concept of machines that carry out tasks in a smart and intelligent way, emulating humans. Machine learning is an application of AI that enables these machines to learn and improve from experience automatically, without being explicitly programmed. It focuses on developing programs that can access data and use it to learn on their own. Deep learning is a subset of machine learning; the term usually refers to deep artificial neural networks, and sometimes to deep reinforcement learning. Deep artificial neural networks are sets of algorithms that can be highly accurate, especially for problems such as image recognition, sound recognition, and recommender systems.

Machine learning and deep learning use data to train models and build inference engines. These engines use the trained models for data classification, identification, and detection. Low-latency solutions allow the inference engine to process data faster, reducing overall system response time for real-time processing. Vision and video processing is one area where this applies: with the rapid influx of video content on the internet over the past few years, there is an immense need for methods to sort, classify, and identify visual content.

Digital Signal Processing with Field Programmable Gate Arrays (FPGAs)

FPGAs are semiconductor devices that can be configured by the customer or designer after manufacturing. They consist of an array of programmable logic blocks, interconnects, and I/O blocks that can be configured to implement custom hardware functionality. Their key advantage is the ability to execute many operations in parallel, which is particularly beneficial for computationally intensive DSP tasks. As a result, FPGAs have become a competitive alternative for high-performance DSP applications that were previously dominated by general-purpose DSP processors and custom ASICs. DSP on FPGAs leverages this parallel processing capability to handle complex, high-speed signal processing tasks.
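To make the parallelism concrete, here is a minimal Python sketch of a finite impulse response (FIR) filter, a common DSP building block. It is an illustrative software model, not FPGA code: the function name `fir_filter` and the `delay_line` variable are assumptions introduced for this example. The point to notice is that the per-tap products within one output sample do not depend on each other, so an FPGA design could, in principle, compute them with one multiplier per tap in the same clock cycle, whereas a single sequential processor works through them one at a time.

```python
from typing import List


def fir_filter(samples: List[float], taps: List[float]) -> List[float]:
    """Direct-form FIR filter: y[n] = sum over k of taps[k] * x[n - k].

    Software model only; names are hypothetical and chosen for illustration.
    """
    # The delay line plays the role of a shift register holding recent samples.
    delay_line = [0.0] * len(taps)
    outputs = []
    for x in samples:
        # Shift the newest sample in and drop the oldest one.
        delay_line = [x] + delay_line[:-1]
        # Each product below is independent of the others. In hardware these
        # could be separate multipliers working side by side; here they are
        # simply evaluated one after another.
        products = [coeff * value for coeff, value in zip(taps, delay_line)]
        # Summing the products corresponds to an adder tree in hardware.
        outputs.append(sum(products))
    return outputs


if __name__ == "__main__":
    # Two equal taps of 0.5 form a two-point moving average.
    print(fir_filter([1.0, 2.0, 3.0, 4.0], [0.5, 0.5]))
```

For example, `fir_filter([1.0, 2.0, 3.0, 4.0], [0.5, 0.5])` returns `[0.5, 1.5, 2.5, 3.5]`: a two-point moving average in which the first output averages the first sample with the initial zero in the delay line.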

Cloud-based machine learning is currently dominated by Amazon, Google, Microsoft, and IBM. Developers use the algorithm libraries these providers offer for various AI/ML functions to build custom intelligence for analytics or inference. Machine learning algorithms entail huge amounts of data crunching or time-critical decision-making, which conventional CPUs and servers cannot handle effectively. Hence the need to accelerate these algorithms on dedicated hardware, which has led to the development of custom AI chips (ASICs/SoCs) and FPGA- or GPU-based AI platforms. This is where FPGAs have gained relevance in the AI domain, with advantages over GPUs and ASICs in the areas discussed below.

Latency

FPGAs offer lower latency than GPUs or CPUs. FPGAs and ASICs are faster than GPUs and CPUs because they run in a bare-metal environment, without an OS.

Power

Another area where FPGAs outperform GPUs (and CPUs) is in applications that have a constrained power envelope. It takes less power to run an application on a bare-metal FPGA framework.

Flexibility: FPGAs vs ASICs

AI ASICs have a typical production cycle of 12 to 18 months. Changing a design on an ASIC takes much longer than on an FPGA, where a design change requires reprogramming that can take anywhere from a few hours to a few weeks. FPGAs are notoriously difficult to program, but that is the price of reconfigurability and a shorter cycle time.

Parallel Computing for Deep Learning

Deep Neural Networks (DNNs) are all about performing repetitive mathematical operations on a massive scale at very high speed. When implemented on FPGAs, DNNs can exploit parallel computing on a large scale. On a GPU, however, parallel computing gives rise to execution complexity, because work flowing through one pipeline must be accessed and coordinated across the GPU's multiple cores, and computational imbalances can occur if irregular parallelism develops. FPGAs have an advantage over GPUs wherever custom data types exist or irregular parallelism tends to develop. In some instances, FPGAs are also nearly on par with custom AI ASICs in parallel computing performance.
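One example of a custom data type is low-precision fixed-point arithmetic. The sketch below, in plain Python, quantizes values to a signed integer of a chosen bit width and computes a dot product with integer accumulation. The helper names `quantize` and `quantized_dot`, the 4-bit width, and the scale factors are hypothetical choices for illustration; real FPGA toolchains have their own quantization flows. On an FPGA, multipliers can be sized to exactly the chosen bit width, whereas GPUs are generally built around a fixed set of native types.

```python
from typing import List


def quantize(values: List[float], scale: float, bits: int = 8) -> List[int]:
    """Map real values to signed fixed-point integers of the given bit width.

    Hypothetical helper for illustration: each value is divided by `scale`,
    rounded with Python's built-in round(), and clamped to the signed range.
    """
    q_min = -(2 ** (bits - 1))
    q_max = 2 ** (bits - 1) - 1
    return [max(q_min, min(q_max, round(v / scale))) for v in values]


def quantized_dot(a_q: List[int], b_q: List[int],
                  scale_a: float, scale_b: float) -> float:
    """Dot product on quantized integers, rescaled to a real value at the end."""
    # All multiply-accumulate work happens on small integers; only the final
    # result is converted back using the two scale factors.
    accumulator = sum(x * y for x, y in zip(a_q, b_q))
    return accumulator * scale_a * scale_b


if __name__ == "__main__":
    weights = quantize([0.5, -0.25, 1.0], scale=0.25, bits=4)
    inputs = quantize([1.0, 1.0, 1.0], scale=0.25, bits=4)
    print(weights, inputs, quantized_dot(weights, inputs, 0.25, 0.25))
```

With these inputs the quantized weights are `[2, -1, 4]`, the quantized inputs are `[4, 4, 4]`, and the result is `1.25`, which matches the floating-point dot product because every value here is an exact multiple of the scale. In general, quantization introduces a rounding error that has to be weighed against the savings in logic and power.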

Recent Developments in FPGA-based AI

FPGAs have traditionally been complicated to program, with a steeper learning curve than conventional software development. This has been the major bottleneck in offloading algorithms to FPGAs. However, leading FPGA makers now offer AI accelerator hardware platforms and software development suites that bridge the gap in migrating conventional AI algorithms to an FPGA- or FPGA-SoC-specific implementation.

Xilinx ML Suite

The Xilinx ML Suite enables developers to optimize and deploy accelerated ML inference. It supports many common machine learning frameworks, such as Caffe, MXNet, and TensorFlow, as well as Python and RESTful APIs. Xilinx also provides a generic inference processor, xDNN. Running on Xilinx Alveo Data Center accelerator cards, the xDNN processing engine is a high-performance, energy-efficient DNN accelerator that, according to Xilinx, outperforms many common CPU and GPU platforms in raw performance and power efficiency for real-time inference workloads. xDNN is a generic CNN engine that supports a wide variety of standard CNN networks, and it integrates into these ML frameworks through the Xilinx xfDNN software stack.

https://www.xilinx.com/publications/solution-briefs/machine-learning-solution-brief.pdf

Intel AI toolkit

Intel® has released a development tool that enables efficient execution of neural network models from several deep learning training frameworks on any Intel AI hardware engine, including FPGAs. Intel's free Open Visual Inference & Neural Network Optimization (OpenVINO™) toolkit can convert and optimize a TensorFlow™, MXNet, or Caffe model for use with any of Intel's standard hardware targets and accelerators. Developers can also run the same DNN model across several Intel targets and accelerators (e.g., CPU, CPU with integrated graphics, Movidius, and FPGAs) after converting it with OpenVINO, experimenting on the actual hardware to find the best fit in terms of cost and performance.

Conclusion

Artificial Intelligence and Machine Learning are rapidly evolving technologies with an increasing demand for acceleration. With Xilinx and Intel providing toolchains for AI (ML/DL) acceleration on their FPGAs, FPGAs are set to become a sought-after option for implementing AI applications. Machine vision, autonomous driving, driver assist, and data centers are among the applications that stand to benefit from the rapid deployment capabilities of FPGA-based AI systems.

By Rajesh Chakkingal, Associate Vice President – VLSI