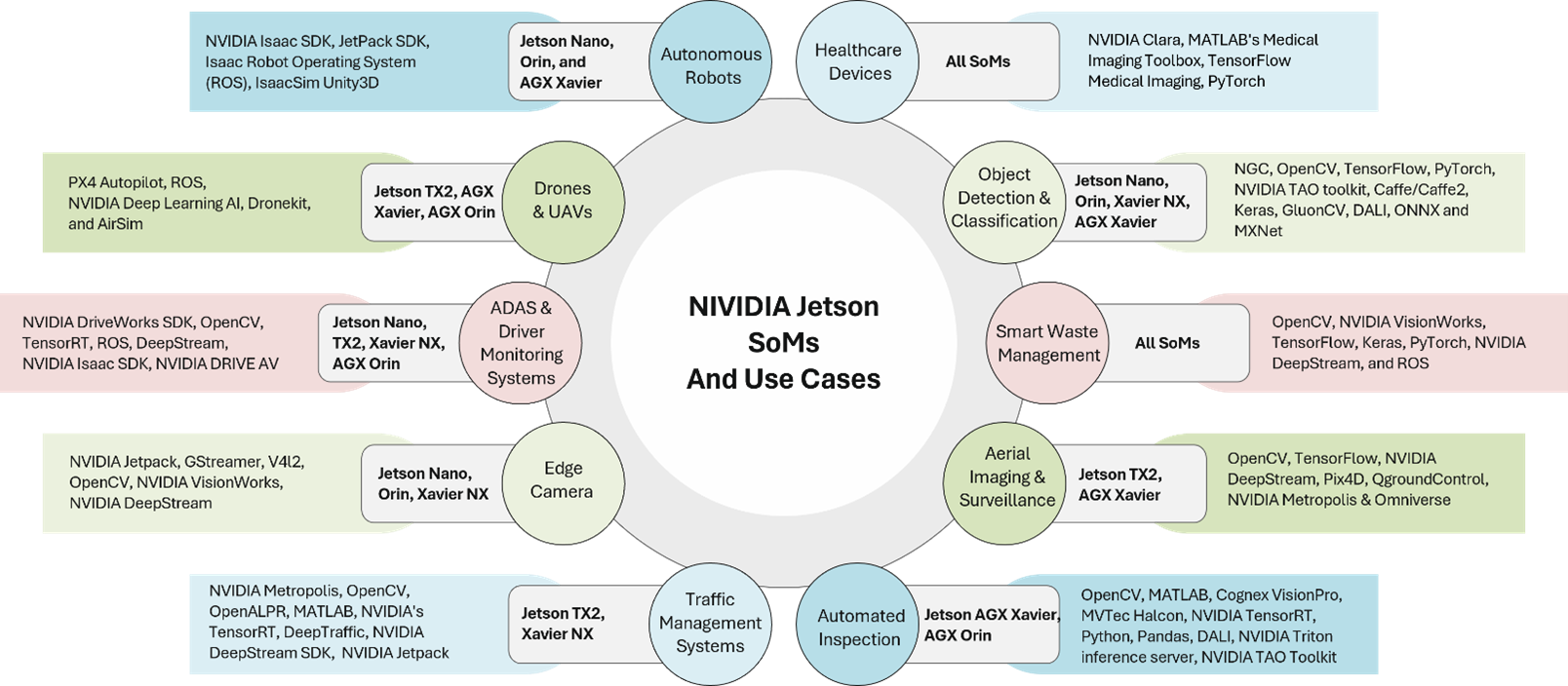

The first part of this blog focused on various NVIDIA Jetson System on Modules (SoMs) and their hardware capabilities. This part looks at some of the popular use cases of these SoMs. All of these use cases benefit from the balance of power, performance, and energy efficiency that is fundamental to autonomous machines, edge computing, and other compute-intensive applications.

Scroll down to learn more about the use cases of Jetson platforms and jumpstart your inventiveness with these powerful SoMs.

NVIDIA Jetson Use Cases

Autonomous Systems and Platforms

NVIDIA Jetson drives innovation in a range of domains, including Autonomous Robotics, Drones, ADAS, Healthcare, and more, offering high-speed interface support for diverse sensors. These SoMs enable real-time image analytics across a wide range of applications. Software packages such as the Isaac SDK, JetPack SDK, and ROS extend the capabilities of Jetson SoMs for multi-modal autonomous applications. The broader software ecosystem, including NVIDIA TensorRT, GStreamer, and OpenCV, complements the SoMs' high-performance computing and real-time capabilities across various industries.

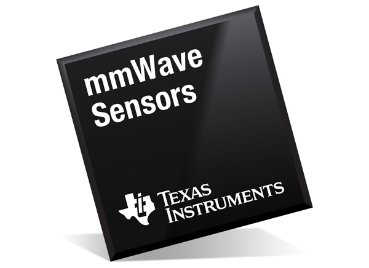

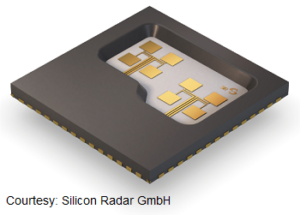

Autonomous Robots: NVIDIA Jetson is driving a new era of autonomous robotics, from security and healthcare to manufacturing, logistics, and agriculture. NVIDIA Jetson Nano, Jetson Orin, and Jetson AGX Xavier offer high-speed interface support for multiple sensors, including cameras, mmWave radars, and IMUs, enabling real-time image analytics and inference at the edge. These SoMs are well suited to multi-modal autonomous robots. Their advanced visual analytics support object detection & classification and pose estimation, and together with motion planning they deliver the accuracy and fidelity needed to operate in factories, warehouses, and other industrial settings. In addition, these SoMs help autonomous robots learn, adapt, and evolve using computer vision and machine learning.

Several software options are available for getting the full potential out of NVIDIA Jetson SoMs in autonomous robots. NVIDIA's Isaac SDK is designed specifically for robotics. It offers a rich toolkit for developing robotic applications, which makes it a natural choice for Jetson SoM users. NVIDIA's JetPack SDK, tailored for Jetson SoMs, delivers comprehensive tools, libraries, and AI development frameworks. With GPU acceleration through CUDA and deep learning model optimization through TensorRT, it is a robust foundation for AI applications. Additionally, the widely adopted Robot Operating System (ROS) provides a flexible, modular framework for autonomous robot development. With support for Jetson SoMs, ROS facilitates efficient sensor integration, control, and navigation.

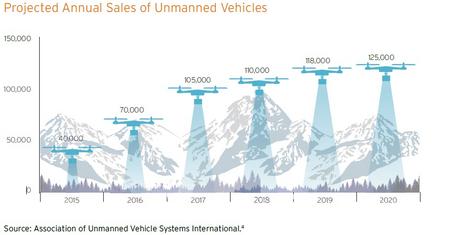

Drones & UAVs: UAVs and autonomous drones are becoming increasingly popular in commercial, emergency response, and military applications. Their appeal comes from their low cost, their adaptability, and their ability to manoeuvre in harsh surroundings and weather conditions. UAVs rely on multiple sensors and redundant systems to operate autonomously. The NVIDIA Jetson TX2 series is among the fastest and most power-efficient embedded AI computing devices on the market. It supports up to six cameras at 4K resolution, making it a strong choice for battery-operated embedded systems such as drones and robots. The SoM can handle navigation, image processing and classification, alerts, speech recognition, data encryption, and edge inference simultaneously.
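To put "up to six cameras at 4K" in perspective, the short sketch below estimates the raw pixel rate such a payload would generate. The 30 fps frame rate and 10-bit raw output are assumptions chosen for illustration, not TX2 specifications, and the function names are hypothetical.

```python
# Back-of-envelope sensor throughput for a multi-camera drone payload.
# Assumed (illustrative) values: 3840x2160 sensors, 30 fps, 10-bit raw output.


def pixel_rate(width: int, height: int, fps: float, cameras: int = 1) -> float:
    """Total pixels per second produced by all cameras together."""
    return width * height * fps * cameras


def raw_bandwidth_gbps(width: int, height: int, fps: float,
                       bits_per_pixel: int, cameras: int = 1) -> float:
    """Aggregate uncompressed sensor bandwidth in gigabits per second."""
    return pixel_rate(width, height, fps, cameras) * bits_per_pixel / 1e9


if __name__ == "__main__":
    rate = pixel_rate(3840, 2160, 30, cameras=6)
    bandwidth = raw_bandwidth_gbps(3840, 2160, 30, bits_per_pixel=10, cameras=6)
    print(f"{rate / 1e6:.1f} Mpixel/s, {bandwidth:.2f} Gbit/s raw")
    # prints: 1493.0 Mpixel/s, 14.93 Gbit/s raw
```

Under these assumptions, roughly 1.5 Gpixel/s of raw data is one reason camera data on such modules typically flows through dedicated hardware blocks (ISP, video encoders) rather than general-purpose CPU code.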

For drones and UAVs powered by NVIDIA Jetson TX2, software options include PX4 Autopilot, ROS, NVIDIA Deep Learning AI, DroneKit, and AirSim. PX4 Autopilot delivers advanced flight control, while ROS offers a flexible framework that can be customized for various tasks. Deep Learning AI accelerates machine learning tasks, DroneKit facilitates custom application development, and AirSim provides a safe simulation environment for testing. Combined with the Jetson TX2's multi-camera support and AI capabilities, these tools can be used to build autonomous drones that excel in navigation, image processing, speech recognition, and edge inference while operating safely and reliably.

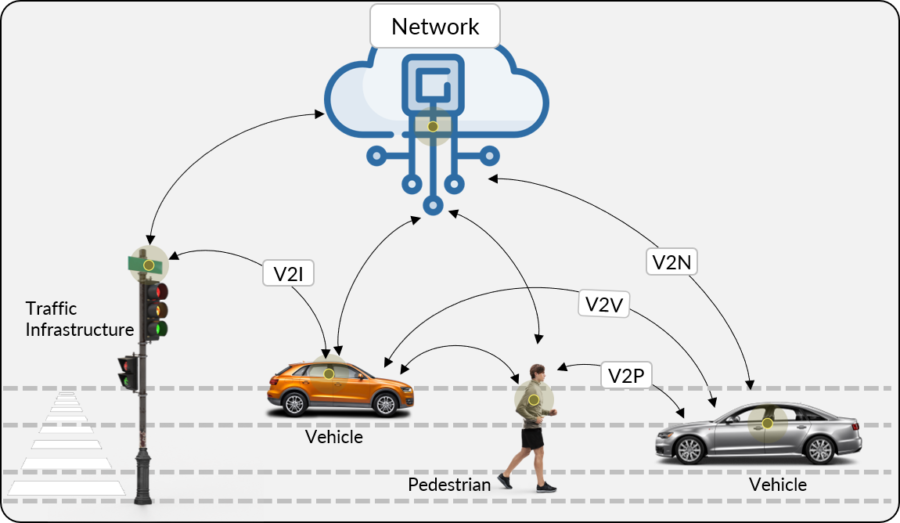

ADAS and Driver Monitoring Systems: The automotive industry is increasingly adopting advanced technologies in the quest for safer roads and fewer accidents. A semi- or fully autonomous vehicle must analyse an enormous amount of sensor data and act in real time with no margin for error. ADAS employs many algorithms, such as forward collision warning, road sign detection, lane analysis, and lane departure warning. Running all these complex algorithms in real time requires powerful compute engines. NVIDIA Jetson SoMs such as Nano, TX2, Xavier NX, AGX Xavier, and AGX Orin, combined with NVIDIA TensorRT, are well suited to designing collision, lane departure, and speeding warning systems. Besides being capable GPU-enabled platforms for autonomous systems, Jetson platforms are also power-efficient and cost-effective.

The Jetson series delivers up to 275 TOPS of performance (on Jetson AGX Orin) and comes with industry-leading machine learning and computer vision technologies. These modules can process immense amounts of data from multiple cameras and sensors to build an accurate picture of the driving situation in real time. They also support deep head pose estimation from 2D or 3D depth data using a Convolutional Neural Network (CNN) and real-time traffic sign detection using computer vision algorithms. Fast visual AI processing also enables real-time prediction of collision risk for warning and avoidance.
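Forward collision warning, one of the ADAS functions listed above, is commonly built on a time-to-collision (TTC) estimate. The sketch below assumes that the perception stack already provides the distance to the lead vehicle and its closing speed. That stack might be, for example, a TensorRT-optimized detector plus a tracker. The class name, the function names, and the 2.5 s / 1.5 s thresholds are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LeadVehicle:
    distance_m: float         # range to the vehicle ahead
    closing_speed_mps: float  # positive when the gap is shrinking


def time_to_collision(lead: LeadVehicle) -> float:
    """Constant-velocity TTC in seconds; infinity if the gap is not closing."""
    if lead.closing_speed_mps <= 0:
        return math.inf
    return lead.distance_m / lead.closing_speed_mps


def fcw_level(lead: LeadVehicle, warn_s: float = 2.5, brake_s: float = 1.5) -> str:
    """Map TTC to a warning level; the thresholds are hypothetical defaults."""
    ttc = time_to_collision(lead)
    if ttc <= brake_s:
        return "BRAKE"
    if ttc <= warn_s:
        return "WARN"
    return "OK"


if __name__ == "__main__":
    # 30 m gap closing at 15 m/s gives TTC = 2.0 s
    print(fcw_level(LeadVehicle(distance_m=30.0, closing_speed_mps=15.0)))  # WARN
```

A constant-velocity TTC is the simplest model. Production systems usually refine it with acceleration terms and smoothing over several frames.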

Software plays a crucial role in ADAS and Driver Monitoring Systems powered by NVIDIA Jetson SoMs. Leading options include NVIDIA's DriveWorks SDK, OpenCV, TensorRT, ROS, DeepStream, and the NVIDIA Isaac SDK. DriveWorks offers a comprehensive toolkit for automotive systems, while OpenCV handles computer vision tasks. TensorRT optimizes deep learning models for real-time predictions. ROS provides a modular framework, and DeepStream is ideal for analysing video and sensor data streams. For robotics-based ADAS, the NVIDIA Isaac SDK offers navigation and mapping. Jetson SoMs, from Nano to AGX Orin, bring the computational power and ML support. Together, this software enables complex algorithms and real-time understanding of the environment, contributing to safer roads.

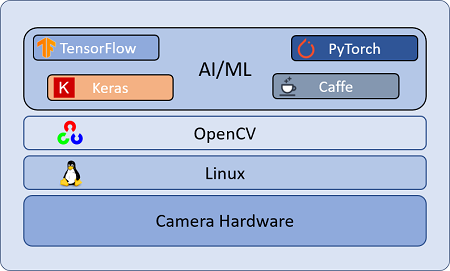

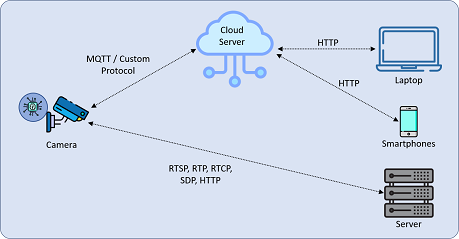

Edge Camera: NVIDIA Jetson Nano, Xavier NX, and TX2 series modules are good choices for building IoT-enabled embedded vision systems and edge cameras, thanks to their high-performance, low-power computing capabilities. These modules are well suited to processing complex data at the edge and running advanced AI workloads. Their powerful GPUs and AI cores give developers considerable freedom to implement versatile AI-based edge computing systems.

Diverse software solutions are available for Edge Cameras on NVIDIA Jetson SoMs. GStreamer is ideal for multimedia pipelines, OpenCV offers GPU-accelerated computer vision, and NVIDIA DeepStream powers real-time video analytics. Python and TensorFlow enable AI development, and ROS caters to robotics. Jetson's hardware supports real-time image processing, AI analytics, and IoT connectivity. The right choice depends on the application's requirements, but together these options enable versatile edge computing.
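A common starting point for a CSI edge camera is a GStreamer pipeline that OpenCV can consume. The helper below builds a pipeline string around the Jetson-specific `nvarguscamerasrc` and `nvvidconv` elements. The function name and default resolutions are assumptions, and the exact caps a given sensor accepts depend on its driver.

```python
def csi_camera_pipeline(sensor_id: int = 0,
                        capture_width: int = 1920, capture_height: int = 1080,
                        framerate: int = 30,
                        output_width: int = 960, output_height: int = 540,
                        flip_method: int = 0) -> str:
    """Build a GStreamer pipeline string for a Jetson CSI camera.

    nvarguscamerasrc captures into NVMM (hardware) memory, nvvidconv scales
    and converts to BGRx, and videoconvert produces the BGR frames that
    OpenCV expects at the appsink.
    """
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={capture_width}, "
        f"height={capture_height}, framerate={framerate}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        f"video/x-raw, width={output_width}, height={output_height}, format=BGRx ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink"
    )


if __name__ == "__main__":
    print(csi_camera_pipeline())
```

On the device, the string would typically be passed to `cv2.VideoCapture(csi_camera_pipeline(), cv2.CAP_GSTREAMER)`. This works only if OpenCV was built with GStreamer support.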

Healthcare Devices: High-resolution medical imaging and its analysis are critical elements of modern healthcare. The biggest challenges in developing advanced healthcare and medical systems are high-speed, low-latency data transfer, real-time analysis of massive data sets, and the inference of actionable insights. NVIDIA Jetson platforms address these concerns and help accelerate the development of next-generation medical applications. These applications enable advanced imaging and faster diagnosis, help determine the best treatment procedures, and enhance surgical processes. Interfaces such as multiple CSI cameras, USB 3.2, GigE (up to 10GbE), and multi-mode DisplayPort facilitate high-speed data transfer between the sensors, the processor, and the UI for superior operational efficiency. The Jetson SoMs support H.265 and H.264 video encoding and decoding (up to 8K/30 or 4K/60), enabling low-latency video transmission and display. These SoMs also come with an end-to-end software framework, datasets, and domain-optimized tools for modular and scalable deployment of AI-based healthcare products.

A range of software options can tap into the high-performance computing and real-time capabilities of Healthcare Devices powered by NVIDIA Jetson SoMs. NVIDIA Clara, designed for healthcare, provides imaging and AI tools. MATLAB's Medical Imaging Toolbox, accelerated by Jetson's GPU, supports image-related tasks. TensorFlow Medical Imaging offers ready-to-use models for AI-based medical applications. PyTorch brings deep learning flexibility for custom neural networks, and NVIDIA's Deep Learning Accelerated Libraries speed up AI processing further. With their computational power and imaging support, Jetson SoMs are an ideal fit for healthcare devices. The software choice depends on the device's needs, paving the way for advanced medical systems.

Vision Analytics Applications

NVIDIA Jetson powers diverse Vision Analytics applications, with a focus on Object Detection & Classification. Jetson Nano supports TensorFlow and PyTorch, making it well suited to AI vision tasks, while more powerful modules such as Xavier NX and AGX Xavier add tracking capabilities. Jetson TX2, Xavier NX, and AGX Xavier excel at real-time object detection when combined with software such as Metropolis, OpenALPR, DeepStream, Pix4D, and QGroundControl. This makes them ideal for applications such as Smart Waste Management, Intelligent Traffic Management Systems, and Aerial Imaging.

Object Detection & Classification: Object detection and classification workloads are growing rapidly thanks to developments in artificial intelligence, connectivity, and sensor technologies. The NVIDIA Jetson Nano supports machine learning frameworks such as TensorFlow, PyTorch, Caffe/Caffe2, Keras, and MXNet, making it an ideal platform for AI vision tasks and AI-based computer vision applications. Jetson Nano suits applications such as Edge Cameras, Warehouse Robots, and Digital Signage. These applications demand object detection and classification, segmentation, speech processing, image recognition, and object counting. If the application also requires tracking and labelling of detected objects, developers can choose a more powerful Jetson module, such as Xavier NX, Orin, or AGX Xavier.

For object detection and classification on NVIDIA Jetson SoMs, two standout software options are TensorFlow and PyTorch. TensorFlow, a widely used deep learning framework, offers robust support for these tasks. It simplifies development with pre-trained models available through the TensorFlow Object Detection API, and these models can be fine-tuned and optimized for Jetson GPUs for strong performance. PyTorch, another popular deep learning framework known for its flexibility and dynamic computation graph, provides pre-trained models and libraries for object detection and classification. Jetson users can also install Jetson-specific PyTorch builds that are optimized for the platform. Together, these two frameworks provide powerful tools for tackling object detection and classification on NVIDIA Jetson SoMs.
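Whichever framework produces the raw detections, most object detectors finish with non-maximum suppression (NMS), which discards boxes that overlap a higher-scoring box for the same object. The pure-Python sketch below shows the greedy form of the algorithm for clarity. On Jetson, this step would normally run inside the framework (for example `torchvision.ops.nms`) or within a TensorRT/DeepStream pipeline. The function names here are illustrative.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU of about 0.68
```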

Intelligent Traffic Management: Robust, accurate, and secure traffic management systems are vital to reducing congestion and minimizing accidents on highways and city roads. Intelligent traffic management systems enable real-time detection and classification of vehicle types. They also support estimation of speed, queue length, and vehicle flow, as well as traffic pattern analysis. NVIDIA Jetson TX2 and Jetson Xavier NX platforms are ideal for real-time traffic management solutions. They offer the computational power that demanding neural networks require, support for multiple 4K cameras, high-speed video encoding/decoding, and real-time data communication with remote devices and the cloud. These modules offer 10/100/1000BASE-T Ethernet for low-latency sensor connectivity and, with a suitable carrier board, Power over Ethernet.
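Speed estimation, one of the functions listed above, can be done in several ways. One simple camera-based approach, assumed here for illustration, times a tracked vehicle between two virtual lines drawn a known ground distance apart. The frame indices would come from the detector and tracker running on the Jetson module. The function names are hypothetical.

```python
def speed_kmph(frame_line1: int, frame_line2: int, fps: float, line_gap_m: float) -> float:
    """Average speed between two virtual lines, from the frames at which a track crosses them."""
    elapsed_s = (frame_line2 - frame_line1) / fps
    if elapsed_s <= 0:
        raise ValueError("vehicle must cross the second line after the first")
    return line_gap_m / elapsed_s * 3.6  # convert m/s to km/h


def flow_per_hour(vehicle_count: int, window_s: float) -> float:
    """Vehicle flow rate extrapolated from a counting window."""
    return vehicle_count * 3600 / window_s


if __name__ == "__main__":
    # 30 frames at 30 fps over a 10 m gap is 1 s, i.e. 36 km/h
    print(round(speed_kmph(100, 130, 30.0, 10.0), 1))
    print(flow_per_hour(50, 300))  # vehicles per hour from a 5-minute count
```

The accuracy of this approach depends on how precisely the line gap is measured on the road and on the camera frame rate. A one-frame timing error at 30 fps over a short gap can noticeably shift the estimate.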

A versatile collection of software is available for Intelligent Traffic Management powered by NVIDIA Jetson SoMs. NVIDIA Metropolis, an IoT platform, uses deep learning for real-time object detection. OpenALPR specializes in license plate recognition and, being GPU-optimized, runs fast on Jetson-based traffic systems. MATLAB is useful for traffic simulation, image processing, and deep learning, especially when paired with Jetson's GPU support. NVIDIA's TensorRT accelerates deep learning models for traffic analysis. For controlled experimentation, DeepTraffic offers traffic simulation. These tools work well with Jetson's computing power, camera support, real-time capabilities, and connectivity, and can help developers build intelligent traffic solutions tailored to specific needs.

Smart Waste Management: Waste is a growing problem for many industries, and NVIDIA Jetson modules are well suited to building smart waste management systems. Their object detection and classification capabilities help make trash-versus-recycling decisions in an industrial environment. Jetson modules combine high computing power, CSI camera support, and AI-powered image analytics. These capabilities can be used to build autonomous waste management systems that identify and sort a wide variety of items. The AI algorithms classify and categorize waste and direct each item to the appropriate bin. Integrated digital weighing scales let the system measure, quantify, and keep detailed records of the segregated waste.
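The classify-route-weigh loop described above can be sketched as follows. The class labels, bin names, confidence threshold, and the policy of diverting low-confidence items to manual review are all hypothetical. In a real system, the label and confidence would come from the detector running on the Jetson module, and the weight would come from the integrated scale.

```python
from collections import defaultdict
from typing import Dict

# Hypothetical mapping from detector class labels to physical bins
BIN_FOR_CLASS: Dict[str, str] = {
    "pet_bottle": "plastic",
    "hdpe_container": "plastic",
    "aluminium_can": "metal",
    "cardboard": "paper",
    "food_waste": "organic",
}


class SortingLedger:
    """Routes classified items to bins and keeps a running weight per bin."""

    def __init__(self, min_confidence: float = 0.6) -> None:
        self.min_confidence = min_confidence
        self.kg_per_bin: Dict[str, float] = defaultdict(float)

    def route(self, label: str, confidence: float, weight_kg: float) -> str:
        """Choose a bin for one item and record its measured weight there."""
        if confidence < self.min_confidence:
            target = "manual_review"  # uncertain detections go to a human
        else:
            target = BIN_FOR_CLASS.get(label, "landfill")  # unknown classes fall back to landfill
        self.kg_per_bin[target] += weight_kg
        return target


if __name__ == "__main__":
    ledger = SortingLedger()
    print(ledger.route("pet_bottle", 0.92, 0.03))      # plastic
    print(ledger.route("aluminium_can", 0.40, 0.015))  # manual_review
    print(dict(ledger.kg_per_bin))
```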

In Smart Waste Management powered by NVIDIA Jetson SoMs, software options such as OpenCV, TensorFlow, PyTorch, NVIDIA DeepStream, and ROS bring high-performance capabilities. OpenCV can detect and analyse waste items, and TensorFlow can categorize them. PyTorch allows waste tracking to be customized to specific needs, DeepStream accelerates waste detection, and ROS handles robot-based waste handling. With their computing power and CSI camera support, Jetson SoMs are a good match for this task. Together, these tools enable autonomous waste management systems that identify, categorize, and manage waste items more efficiently, improving industrial waste management.

Aerial Imaging and Surveillance: Aerial imaging and surveillance are finding applications in domains such as Military, Survey, Forestry, Search and Rescue, Oil & Gas, Mining, Agriculture, and Critical Infrastructure Protection. NVIDIA Jetson platforms are among the finest processing engines on the market for building AI-powered aerial imaging solutions. High computing power, advanced graphics processing, deep neural network acceleration, and support for modern AI workloads give these platforms exceptional image processing capabilities. NVIDIA Jetson TX2 and AGX Xavier are ideal for designing aerial imaging solutions that offer accelerated image processing, mapping, detection, segmentation, and tracking.

NVIDIA Jetson SoMs provide the processing power for Aerial Imaging and Surveillance, and multiple software options are available for it, including OpenCV, TensorFlow, NVIDIA DeepStream, Pix4D, and QGroundControl. OpenCV is well suited to real-time object tracking, while TensorFlow offers tools and pre-trained models for custom AI solutions. DeepStream enables real-time image processing for aerial surveillance, Pix4D transforms images into 3D maps, and QGroundControl helps plan and manage drone missions. Together, these tools help build efficient AI systems for applications across the military, agriculture, and infrastructure protection.
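Mapping tools such as Pix4D characterise survey resolution by the ground sample distance (GSD), the ground width covered by a single image pixel. The helper below uses the standard pinhole-camera relation for a camera pointing straight down. The function names are hypothetical, and the camera parameters in the example are illustrative rather than tied to any product named above.

```python
def gsd_cm_per_px(sensor_width_mm: float, focal_length_mm: float,
                  altitude_m: float, image_width_px: int) -> float:
    """Ground sample distance in cm/pixel for a nadir-pointing pinhole camera."""
    return (sensor_width_mm * altitude_m * 100) / (focal_length_mm * image_width_px)


def footprint_width_m(sensor_width_mm: float, focal_length_mm: float, altitude_m: float) -> float:
    """Ground width covered by one image at the given altitude."""
    return sensor_width_mm * altitude_m / focal_length_mm


if __name__ == "__main__":
    # Illustrative camera: 13.2 mm sensor width, 8.8 mm lens, 5472 px image width
    print(f"{gsd_cm_per_px(13.2, 8.8, 100.0, 5472):.2f} cm/px at 100 m")
    print(f"{footprint_width_m(13.2, 8.8, 100.0):.1f} m footprint width")
```

GSD scales linearly with altitude, so halving the flight height halves the GSD (finer detail). The trade-off is that more images are needed to cover the same area.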

Automated Inspection: NVIDIA Jetson AGX Xavier is well suited to automated inspection and can cater to a wide range of industries and requirements. Its support for multiple 4K cameras and advanced machine-learning inference helps automate inspection and the identification of even the smallest defects or differences. Inspection systems built on Jetson AGX Xavier draw on machine-learning models, advanced AI computing, visualization, data analytics, and high-resolution simulation. With these, they can carry out routine and dangerous inspections with precision and speed.

A set of capable software tools is available for Automated Inspection with NVIDIA Jetson AGX Xavier. OpenCV, with GPU-accelerated processing, can spot defects in real time. NVIDIA's deep learning software helps train defect-detection models. MATLAB provides advanced inspection algorithms, Cognex VisionPro offers high precision for manufacturing, and MVTec HALCON is ideal for industrial inspection. These tools can be combined to create efficient AI-powered inspection systems that improve quality control across industries.
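A classic non-deep-learning baseline for automated inspection compares each captured part against a "golden" reference image and flags pixels that differ beyond a tolerance. The sketch below assumes that the images are already aligned, captured under the same lighting, and stored as small grayscale arrays. The function names, tolerance, and pass criterion are hypothetical. In an OpenCV-based system, `cv2.absdiff` followed by thresholding would typically replace the pure-Python loop.

```python
from typing import List, Tuple

Image = List[List[int]]  # 8-bit grayscale image stored as rows of pixel values


def find_defects(sample: Image, golden: Image, tolerance: int = 30) -> List[Tuple[int, int]]:
    """Return (row, col) of every pixel differing from the reference by more than tolerance."""
    if len(sample) != len(golden) or any(len(a) != len(b) for a, b in zip(sample, golden)):
        raise ValueError("sample and golden images must have the same shape")
    return [
        (r, c)
        for r, (row_s, row_g) in enumerate(zip(sample, golden))
        for c, (ps, pg) in enumerate(zip(row_s, row_g))
        if abs(ps - pg) > tolerance
    ]


def passes_inspection(sample: Image, golden: Image,
                      tolerance: int = 30, max_defect_pixels: int = 0) -> bool:
    """Accept the part if no more than max_defect_pixels pixels deviate."""
    return len(find_defects(sample, golden, tolerance)) <= max_defect_pixels


if __name__ == "__main__":
    golden = [[100] * 4 for _ in range(3)]
    sample = [row[:] for row in golden]
    sample[0][0] = 110  # small lighting noise, within tolerance
    sample[1][2] = 200  # simulated scratch
    print(find_defects(sample, golden))       # [(1, 2)]
    print(passes_inspection(sample, golden))  # False
```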

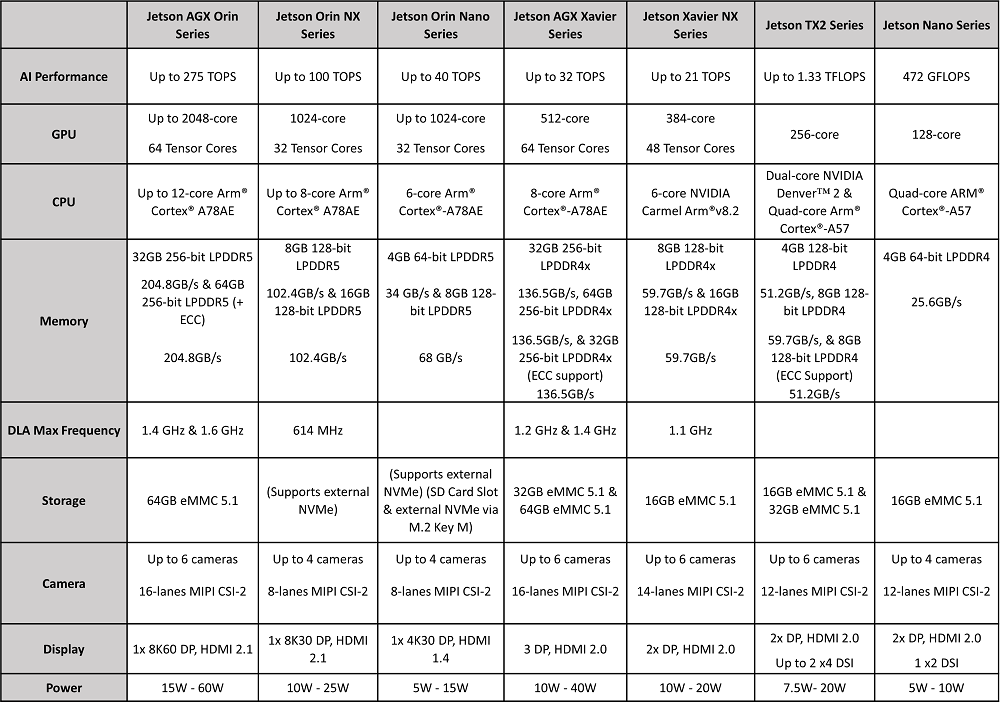

Table 1: NVIDIA Jetson Applications

Conclusion

In a world of data-driven decision-making, the demand for AI-powered solutions will continue to grow and flourish. The flexibility, compact footprint, and high computing power of NVIDIA Jetson platforms offer developers endless possibilities for building embedded systems and AI-powered devices. They also offer users the power-efficient performance needed to create software-defined autonomous machines.

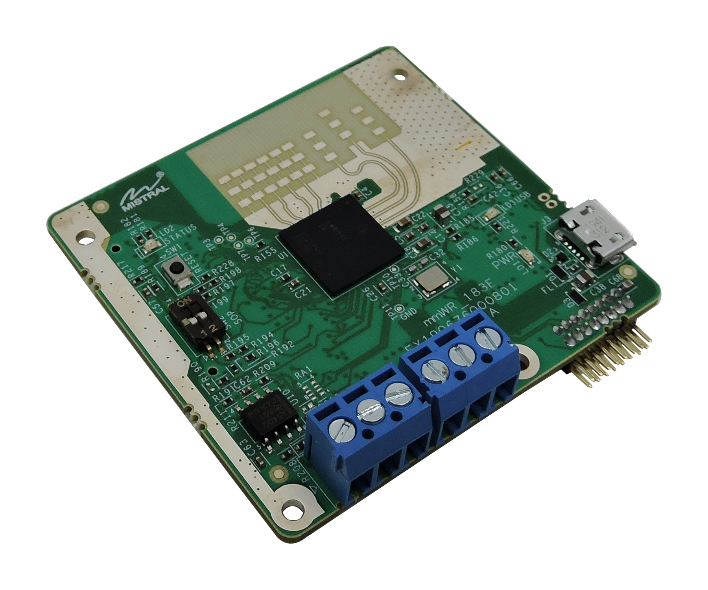

Mistral is a member of the NVIDIA Partner Network, and our solutions accelerate product development and prototyping and reduce time to market. Our team has unparalleled expertise and design competency in offering custom Carrier Boards, Development Platforms, and Application Boards based on Jetson System on Modules. Mistral also offers feature-rich, performance-oriented off-the-shelf Carrier Boards and AI-Sensor Fusion platforms. These are compatible with the Jetson Nano, Jetson Xavier NX, Jetson TX2 NX, and Jetson Orin NX (Available Soon) SoMs. To learn more, visit mistralsolutions.com/neuron.

Leading Aerospace and Defense OEMs are constantly striving to adopt within the aerospace and defense sector. These efforts are aimed at enhancing the stealth characteristics of various military platforms, including aircraft, naval vessels, and ground vehicles. These advancements include the design of stealth airframes, radar-absorbing materials, and innovative sensor systems to reduce the detectability of vehicles and improve their survivability in modern combat scenarios. Additionally, research and development in stealth technology continue to be a focus, with ongoing efforts to refine and evolve these capabilities to maintain a competitive edge in the evolving landscape of aerospace and defense capabilities.

Robotics and Unmanned Systems are rapidly advancing and becoming increasingly popular in the realm of Robotics and Unmanned Systems. One initiative focuses on an electrically powered, fully automated, remotely operated vehicle for tasks like bomb disposal and handling hazardous materials. Another project involves a versatile unmanned ground vehicle capable of autonomous navigation using GPS waypoints and equipped with obstacle detection and avoidance capabilities for military applications, including mine clearing, surveillance, and operation in contaminated zones. Additionally, there is an effort dedicated to developing indigenous Unmanned Aerial Vehicles (UAVs) for surveillance and reconnaissance. Another initiative involves a versatile multi-mission UAV, capable of battlefield surveillance, reconnaissance, target tracking, localization, and artillery fire correction, launched from a mobile hydro-pneumatic launcher with day/night capabilities. These initiatives reflect India’s commitment to enhancing its defense capabilities through the integration of Robotics and Unmanned Systems, with a focus on improving operational efficiency and safety while maintaining a strategic advantage.

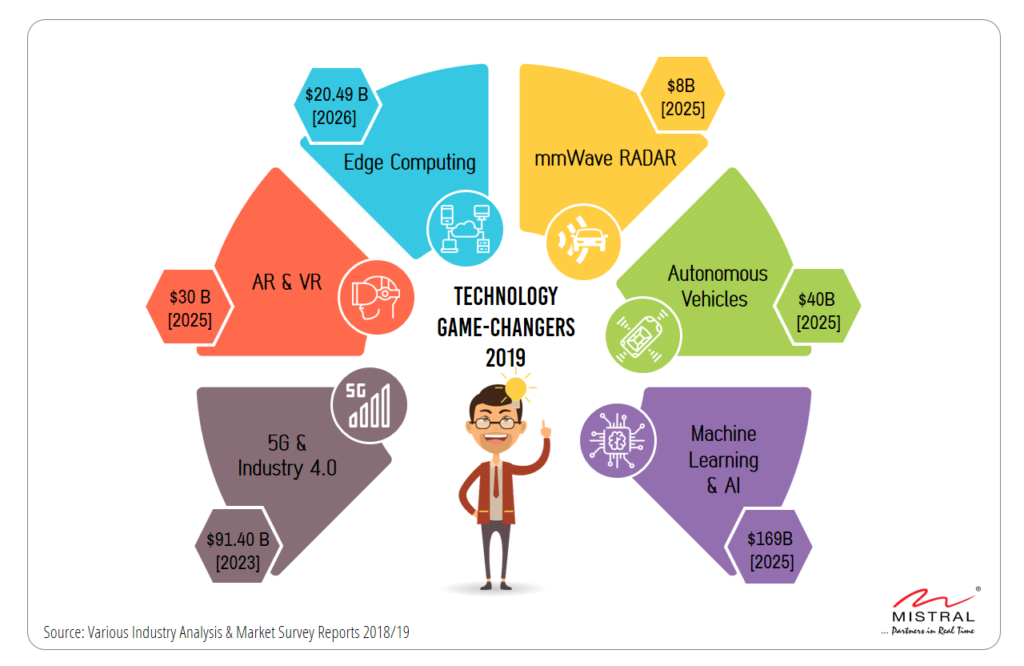

making it more convenient, safe, and efficient. These technologies are being applied in domains such as industrial, automotive, healthcare, smart city, smart buildings, and entertainment. The ever-evolving technology landscape and the growing need for real-time data processing and inference at the edge are encouraging SoC/GPU manufacturers to build powerful edge computing devices that can run modern Deep Learning (DL) workloads. NVIDIA Jetson System on Modules (SoMs), namely NVIDIA Jetson Nano, NVIDIA Jetson TX2 NX, and NVIDIA Jetson Xavier NX, are embedded AI computing platforms designed to accelerate the edge AI product lifecycle. These high-performance, low-power compute engines are built to run complex computer vision, robotics, and other low-power applications. In addition, NVIDIA offers CUDA-X, a set of highly optimized, GPU-accelerated libraries. CUDA-X provides a robust programming environment that works with all Jetson SoMs, enabling customers to move their applications easily across Jetson platforms.

among all Jetson Modules. This SoM is one of the most powerful embedded processors currently on the market, with up to 275 TOPS of performance. The Jetson AGX Orin offers a giant leap forward in Edge AI and robotics. It delivers up to 8x the performance of Jetson AGX Xavier with configurable power between 15W and 50W, making it ideal for autonomous machines and advanced robots. It also helps developers deploy large, complex AI models to solve problems such as 3D perception, natural language understanding, and multi-sensor fusion. The SoM supports multiple concurrent AI inference pipelines, with 64GB of onboard eMMC, 204 GB/s of memory bandwidth, and high-speed interface support for multiple sensors.

The NVIDIA Jetson AGX Xavier is a power-efficient module designed for autonomous machines high-performance AI computing device to run AI applications, process data from high-resolution sensors, and run multiple neural networks in parallel. This module is an ideal entry-level option for adding advanced capabilities to embedded products and to elementary solutions such as intelligent gateways or entry-level NVRs. The Jetson Nano has an integrated 128-core Maxwell GPU, a quad-core 64-bit Arm Cortex-A57 CPU, 4GB of LPDDR4 memory, and support for MIPI CSI-2 and PCIe Gen2 high-speed I/O.

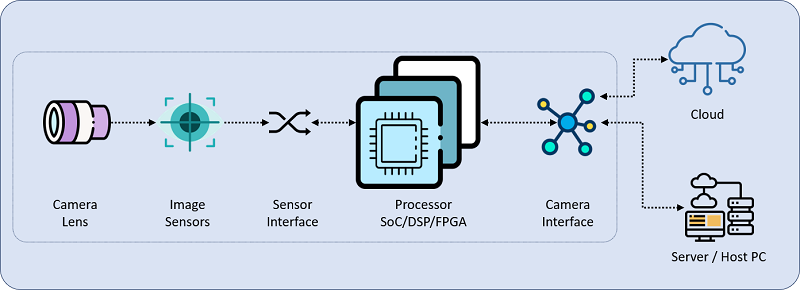

The advent of digital technologies and rapid development in sensor technologies and embedded electronics enabled cameras to go beyond traditional surveillance and facilitate advanced. Key enablers include compute-intensive SoCs, high-speed DSPs, high-speed wireless/wired interfaces, video streaming protocols, and cloud technologies.

The image sensor is the eye of a camera system; it contains millions of discrete photodetector sites called pixels. Its fundamental role is to convert the light focused by the lens into electrical signals, which are then digitized for processing by the camera. The image sensor has a major impact on camera performance, as well as on the camera's size, weight, power, and cost, so choosing the right sensor is key to designing a high-quality surveillance camera. Sensor selection is influenced by factors such as frame rate, resolution, pixel size, power consumption, quantum efficiency, and FoV.
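Several of the sensor selection factors listed above are linked. Resolution and pixel size fix the sensor's active width, and that width, together with the lens focal length, sets the field of view. The sketch below assumes a rectilinear (pinhole) lens focused at infinity. The sensor values and function names are hypothetical.

```python
import math


def sensor_width_mm(horizontal_pixels: int, pixel_pitch_um: float) -> float:
    """Active sensor width from resolution and pixel size."""
    return horizontal_pixels * pixel_pitch_um / 1000.0


def horizontal_fov_deg(sensor_w_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_w_mm / (2 * focal_length_mm)))


if __name__ == "__main__":
    width = sensor_width_mm(1920, 2.9)  # hypothetical 1080p sensor with 2.9 um pixels
    for focal in (4.0, 8.0):
        print(f"f = {focal} mm -> HFoV = {horizontal_fov_deg(width, focal):.1f} deg")
```

For a fixed resolution, larger pixels widen the sensor and therefore the field of view at a given focal length. This is one reason pixel size and FoV have to be traded off together when choosing a sensor.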

Algorithms can be implemented at two layers in a high-performance, real-time video streaming camera design: the first layer caters to image processing, while the second adds intelligence to the captured visuals. High-definition cameras capture images in great detail, but the captured data may still need enhancement for effective analysis and accurate decision-making. Visual algorithms for image correction and enhancement have therefore become a baseline requirement for modern surveillance cameras. Commonly implemented algorithms include autofocus, auto exposure, histogram processing, color balancing, and focus bracketing. The second layer of algorithms is implemented on advanced surveillance cameras that use complex, AI-powered visual analytics to provide real-time situational awareness. These systems combine video capture, processing, video analytics, and real-time communication for effective situational awareness and decision-making. Such 4K video streaming cameras are increasingly used for automated fire and smoke detection, people counting, safe-zone monitoring, facial recognition, human pose estimation, and scene recognition.
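Histogram equalization is an example of a first-layer enhancement algorithm and a common form of histogram-based processing. It spreads the intensity values of a low-contrast frame across the full 8-bit range. The pure-Python sketch below works on a flat list of grayscale values and uses a hypothetical function name. On a real camera, the ISP or OpenCV's `cv2.equalizeHist` would normally do this.

```python
from typing import List


def equalize_histogram(pixels: List[int], levels: int = 256) -> List[int]:
    """Histogram-equalize a flat list of grayscale values in [0, levels)."""
    if not pixels:
        return []
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution function (CDF) of pixel intensities
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    n = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:  # single-intensity image: nothing to stretch
        return list(pixels)
    # Lookup table mapping each input level onto the full output range
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1))) for c in cdf]
    return [lut[p] for p in pixels]


if __name__ == "__main__":
    dim_frame = [50, 50, 51, 52]  # low-contrast pixels
    print(equalize_histogram(dim_frame))  # [0, 0, 128, 255]
```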

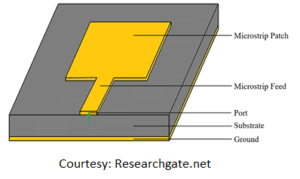

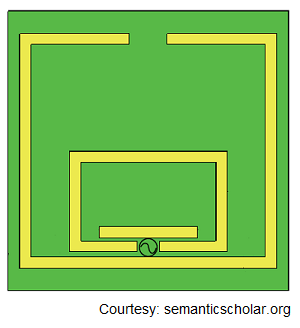

Microstrip patch antennas come in various shapes (rectangular, circular, ring, triangular, quintuple, etc.), designed to match the specific characteristics of the application. They are commonly used in SDARS satellite communication, WLAN, Car-2-Car communication and GPS systems. These antennas are unobtrusively flat and can easily be integrated into a vehicle's structure, typically behind a fender or bumper.

Chip antennas are compact and low-profile, and offer high performance and reliability. They are designed for easy integration into wireless communication systems. In an automotive environment, chip antennas of various bandwidths are employed to establish in-vehicle and vehicle-to-infrastructure connectivity. Antennas for Bluetooth, WLAN, cellular, GNSS, DSRC, SDARS, etc. are now available as chip antennas, making designs more compact and efficient.


Some of the popular wireless technologies used in IoT applications are Wi-Fi (WLAN), Bluetooth and ZigBee, which operate in the 2.4 GHz to 5 GHz range. These wireless standards can handle high data rates over short distances. Wireless standards such as LoRa (which operates in RF bands from 169 MHz to 915 MHz) and SigFox (868 MHz to 928 MHz) are used in applications that need a relatively longer range at much lower data rates.
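
One factor behind the longer reach of sub-GHz standards is that, for antennas of fixed gain, free-space path loss grows with frequency. The sketch below applies the Friis free-space path loss formula, FSPL(dB) = 20·log10(4πdf/c), assuming isotropic antennas and an unobstructed link. It is only part of the picture: LoRa and SigFox also owe much of their range to their low data rates and high receiver sensitivity.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss between isotropic antennas (Friis), in dB."""
    if distance_m <= 0 or freq_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


# Compare the same 1 km link at a sub-GHz LPWAN frequency and at Wi-Fi frequencies.
for label, freq in [("868 MHz (LoRa/SigFox)", 868e6),
                    ("2.4 GHz (Wi-Fi/BLE)", 2.4e9),
                    ("5 GHz (Wi-Fi)", 5.0e9)]:
    print(f"{label:<22} {fspl_db(1_000, freq):6.1f} dB")
```

Over the same distance, the 2.4 GHz link loses about 20·log10(2.4/0.868) ≈ 8.8 dB more than the 868 MHz link, before any obstruction or multipath effects are considered.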

Chip antennas are compact and have relatively low bandwidth. They perform better with large ground planes, which can make integration challenging on boards with high component density. Chip antennas also have limited range, making them optimal for small IoT devices that use low-frequency bands, such as computers, satellite radios, GPS devices, etc.

Wire antennas are more economical than other types of IoT antennas, such as chip and whip antennas. The size of a wire antenna is inversely proportional to its operating frequency, i.e., the antenna gets larger as the frequency decreases, which can create design challenges. Wire antennas are either fixed to the PCB over a ground plane or connected over a coaxial cable, and offer good RF performance. These antennas are available in various patterns and shapes, such as dipole, loop and helix, and are commonly used in connected cars, smart building solutions, etc.
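
The inverse relationship between antenna size and frequency follows from the wavelength, λ = c/f. As an illustration, the sketch below computes ideal half-wave dipole (λ/2) and quarter-wave monopole (λ/4) lengths for a few of the bands mentioned in this post. It assumes free-space propagation; practical antennas are usually trimmed a few percent shorter, and dielectric loading on a PCB shortens them further.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, free-space propagation assumed


def wavelength_cm(freq_hz: float) -> float:
    """Free-space wavelength in centimetres."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT / freq_hz * 100


def ideal_lengths_cm(freq_hz: float) -> tuple[float, float]:
    """Ideal half-wave dipole and quarter-wave monopole lengths, in cm."""
    lam = wavelength_cm(freq_hz)
    return lam / 2, lam / 4


for freq in (169e6, 868e6, 2.4e9):
    dipole, monopole = ideal_lengths_cm(freq)
    print(f"{freq / 1e6:7.0f} MHz: dipole ~{dipole:5.1f} cm, monopole ~{monopole:5.1f} cm")
```

A quarter-wave element at 169 MHz is roughly 44 cm long, compared with about 3 cm at 2.4 GHz, which is why low-frequency wire antennas can be hard to fit into a design.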

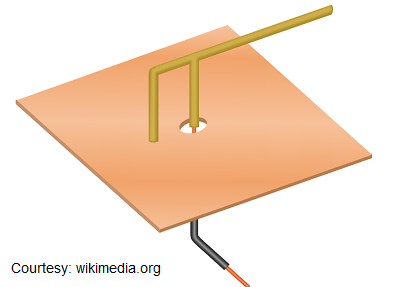

Whip antennas are among the best-performing IoT antennas and probably the priciest of the commonly used types. They are usually positioned outside the device enclosure and connect to the PCB through a coaxial connector. The whip antenna is a common type of monopole antenna and is well suited to wireless connectivity in ISM, LoRa and LPWAN-based applications. Whip antennas are also a good fit for designs that use multiple transceivers, such as hand-held radios, routers, gateways, walkie-talkies, Wi-Fi-enabled devices and vehicles.

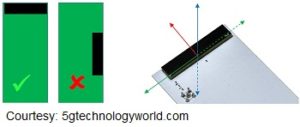

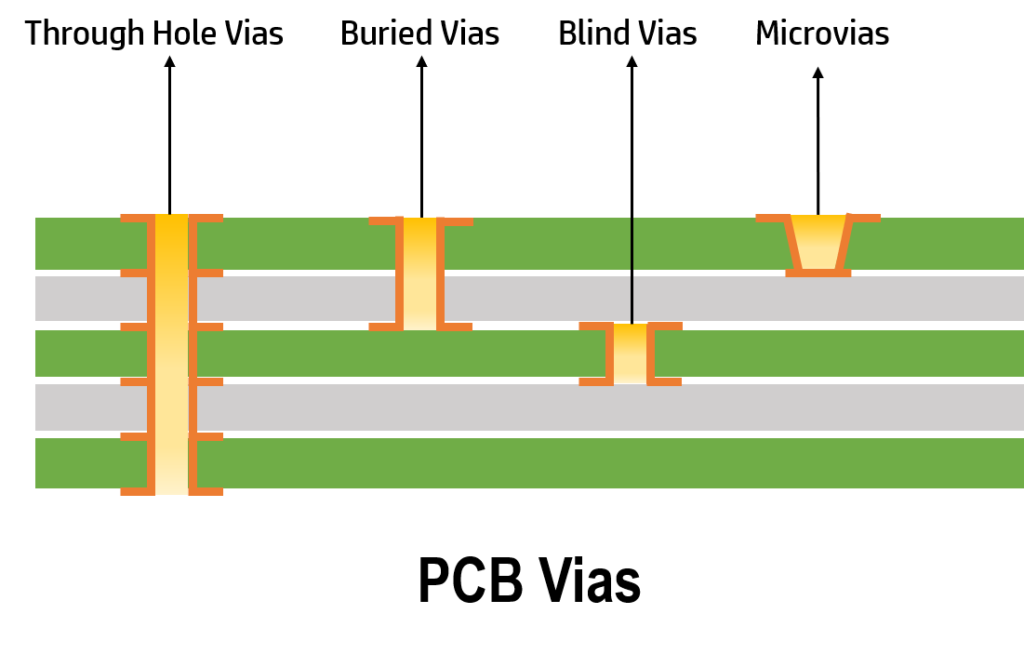

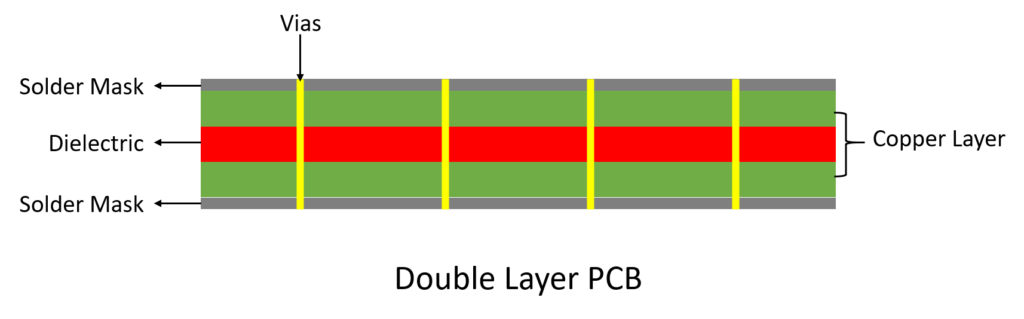

As the name indicates, an Antenna on PCB (AoPCB) is an antenna or antenna pattern embedded on the PCB using modern fabrication technologies, typically as copper traces on the circuit board. PCB antennas are cost-effective and offer great design flexibility, as developers can incorporate the antenna right from the board layout stage. One drawback of an Antenna on PCB is that it consumes board space, which can pose significant challenges in ultra-compact or complex designs with a large number of sensors and components. These antennas are well suited to USB dongles, automotive and robotics applications.

Place the antenna in a corner of the PCB to ensure an adequate keep-out area around it.


growth potential in the automotive industry. With the advancements in printed circuit boards, the HDI PCB technology market looks promising with opportunities in various industries such as IT & Telecommunications, industrial electronics, and consumer electronics.


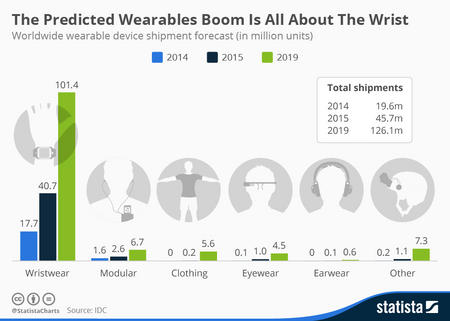

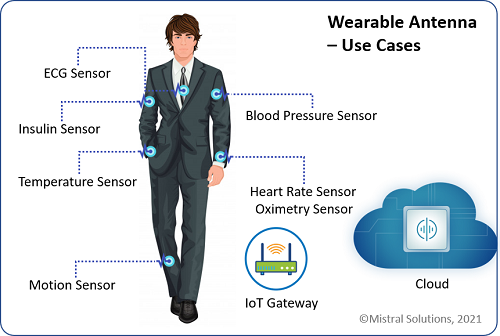

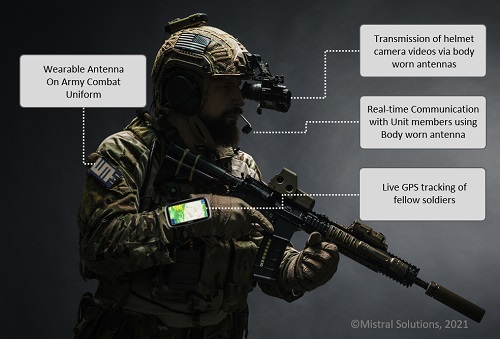

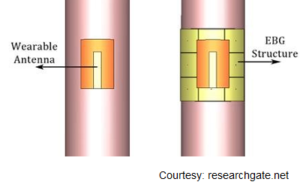

The advent of high-efficiency miniature antennas is enabling invasive and non-invasive devices in consumer, healthcare and several military applications. A few examples of consumer wearables that use wearable antennas are smartwatches (integrated Bluetooth antennas), smart glasses (integrated Wi-Fi, GPS and IR antennas), body-worn action cameras (Wi-Fi and Bluetooth), and small sensor devices in sports shoes (Wi-Fi/Bluetooth) that can be paired with smartphones.

A wireless body area network (WBAN) device enables continuous health monitoring of an elderly person or a patient without hindering their day-to-day activities. Implantable antenna sensors are also used in several biomedical applications, such as heart pacemakers, cochlear implants and intraocular implants. In the military, wearable antennas find applications such as live-location tracking of soldiers and real-time transmission of images and video for instant, decentralized communication. These antennas are also used for access and identity management, navigation, RFID applications, etc.

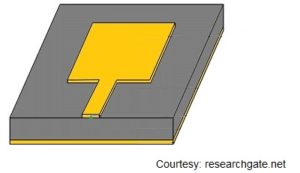

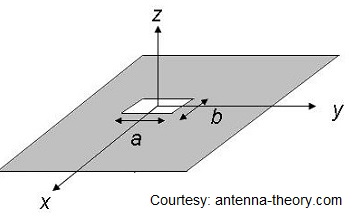

Microstrip antennas consist of a metallic strip or patch mounted on a substrate. They are simple and inexpensive to design and manufacture because of their two-dimensional structure, and are easy to fabricate using modern printed circuit technology. Microstrip antennas are low-profile and conformable to planar and non-planar surfaces, and they support both linear and circular polarization. They can easily be mounted on rigid surfaces and are available in several shapes, such as rectangular, square, circular, triangular and elliptical. Most GPS devices use a microstrip (patch) antenna.
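
How a rectangular microstrip patch is dimensioned can be sketched with the widely used transmission-line model found in standard antenna textbooks. The function below is a minimal sketch of those design equations; the GPS L1 frequency, the 1.6 mm FR-4 substrate and its permittivity of about 4.4 are assumptions chosen for illustration, and a real design would be refined in an EM simulator. Commercial GPS patches often use high-permittivity ceramic substrates instead, which shrink the patch considerably.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def rectangular_patch(f0_hz: float, eps_r: float, h_m: float) -> dict:
    """Approximate rectangular patch dimensions (transmission-line model)."""
    c = SPEED_OF_LIGHT
    # Patch width chosen for efficient radiation.
    w = c / (2 * f0_hz) * math.sqrt(2 / (eps_r + 1))
    # Effective permittivity accounts for fringing fields that are partly in air.
    eps_eff = (eps_r + 1) / 2 + (eps_r - 1) / 2 * (1 + 12 * h_m / w) ** -0.5
    # Length extension due to fringing at each radiating edge.
    delta_l = 0.412 * h_m * ((eps_eff + 0.3) * (w / h_m + 0.264)) / (
        (eps_eff - 0.258) * (w / h_m + 0.8))
    # Resonant length: half a guided wavelength minus the two edge extensions.
    length = c / (2 * f0_hz * math.sqrt(eps_eff)) - 2 * delta_l
    return {"width_mm": w * 1e3, "length_mm": length * 1e3, "eps_eff": eps_eff}


# Hypothetical example: GPS L1 (1575.42 MHz) on 1.6 mm FR-4 (eps_r ~ 4.4).
dims = rectangular_patch(1575.42e6, eps_r=4.4, h_m=1.6e-3)
print({k: round(v, 2) for k, v in dims.items()})
```

Raising the substrate permittivity shrinks the patch, which illustrates the size-versus-substrate trade-off behind the choice of patch material.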

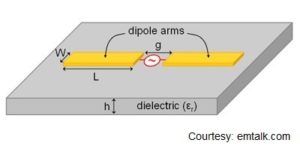

Printed dipole antennas are popular due to their low profile, ease of fabrication, low cost, polarisation purity and wide frequency coverage. Other major advantages are their structure (two arms printed on opposite sides of a dielectric substrate), large bandwidth and single-ended microstrip input. However, dipole antennas are relatively large, which makes them harder to implement in space-constrained applications. In addition, degradation of the omnidirectional radiation pattern and the likely need for a balun can pose challenges in small form-factor designs. Printed dipole antennas are widely used in wireless communication and mmWave applications.

Monopole antennas are half the size of a dipole antenna and are mostly mounted above a ground plane. Thanks to their smaller size, monopole antennas are ideal for applications that require a compact antenna. Monopole antennas exhibit good radiation performance when placed over High Impedance Surfaces (HIS). They are low-profile, low-cost and easy to fabricate, which meets the basic requirements for wearable antennas, and their simple, lightweight structure makes them ideal for integration into clothing.

The printed loop antenna is made of one or more loops in the shape of a circle, square or any other closed geometric shape. In a small loop antenna, the loop dimensions are much smaller than a wavelength, which keeps the current in phase throughout the loop. These antennas are lightweight and have a simple, compact structure. Small loops in particular have relatively poor efficiency because of their very low radiation resistance, so much of the input power is lost as heat due to the high current flow. There are two distinct types: large loop antennas and small loop antennas. Large loop antennas are used for both transmission and reception, whereas small loop antennas are mainly used for reception. These antennas are ideal for small radio devices and body-worn communication systems for military applications.
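
The low efficiency of small loops follows from their radiation resistance, which for an electrically small loop scales with the fourth power of the circumference-to-wavelength ratio: R_r ≈ 20π²(C/λ)⁴·N² for N turns. The sketch below evaluates that standard small-loop approximation (valid roughly for circumferences below about a tenth of a wavelength) and the resulting radiation efficiency; the 5 cm loop, the 433 MHz frequency and the 0.5 Ω loss resistance are hypothetical values chosen for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def small_loop_radiation_resistance(circumference_m: float, freq_hz: float,
                                    turns: int = 1) -> float:
    """Radiation resistance (ohms) of an electrically small loop.

    Uses R_r = 20 * pi^2 * (C / lambda)^4 * N^2, an approximation that holds
    roughly for circumferences below about a tenth of a wavelength.
    """
    lam = SPEED_OF_LIGHT / freq_hz
    return 20 * math.pi ** 2 * (circumference_m / lam) ** 4 * turns ** 2


def radiation_efficiency(r_rad: float, r_loss: float) -> float:
    """Fraction of input power that is radiated rather than dissipated as heat."""
    return r_rad / (r_rad + r_loss)


# Hypothetical example: a 5 cm loop at 433 MHz with an assumed 0.5 ohm loss resistance.
r_rad = small_loop_radiation_resistance(0.05, 433e6)
print(f"R_rad = {r_rad * 1e3:.2f} milliohm, "
      f"efficiency = {radiation_efficiency(r_rad, 0.5):.1%}")
```

With a radiation resistance of only a few milliohms, even a modest conductor loss dominates, and the efficiency in this example comes out on the order of 1%.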

The slot antenna consists of a flat metal surface with one or more narrow slots cut into it. Slot antennas are very versatile and are typically used at frequencies between 300 MHz and 24 GHz. This antenna has an omnidirectional radiation pattern and linear polarization. The slot dimensions (length and width), shape and material characteristics determine its operating characteristics. Its simple structure and flexibility make it suitable for small form-factor wearable applications. Since the antenna can easily be implemented on flexible materials such as denim, it is well suited to medical and military applications. It also maintains effective wireless data transmission when the wearer's posture changes.

The Planar Inverted-F Antenna (PIFA) is used mostly in portable smart devices. As the name indicates, the antenna resembles an inverted 'F'. Its low profile and omnidirectional pattern make it popular among wearable product developers. Like microstrip antennas, PIFAs can also be printed directly on the substrate or circuit board. PIFAs offer compact size, dual-band functionality and very good on-body performance (good SAR values), making them suitable for body-worn electronic devices.

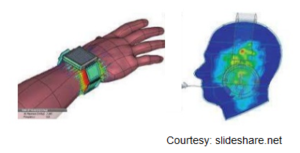

The Federal Communications Commission (FCC) introduced Specific Absorption Rate (SAR) limits for wireless devices to keep radiation exposure of the human body at acceptable levels. The FCC limit is 1.6 W/kg averaged over 1 g of tissue, while the Council of the European Union sets a limit of 2 W/kg averaged over 10 g of tissue. SAR measures the rate at which RF (radio-frequency) energy is absorbed by human tissue, and SAR limits ensure that a wearable device or wireless smart gadget does not exceed the maximum permissible exposure levels.
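
At a single point in tissue, SAR is commonly computed as σ·|E|²/ρ, where σ is the tissue conductivity (S/m), E the RMS electric field (V/m) and ρ the tissue density (kg/m³). The sketch below evaluates that expression and compares it against the two limits quoted above. The tissue and field values are hypothetical, and a real compliance assessment averages SAR over the specified tissue mass using standardized measurement or simulation procedures, so comparing a point value with the limits is only indicative.

```python
# Regulatory SAR limits quoted in the text (W/kg) and their averaging mass.
SAR_LIMITS = {
    "FCC": (1.6, "1 g"),
    "EU": (2.0, "10 g"),
}


def local_sar(sigma_s_per_m: float, e_rms_v_per_m: float,
              density_kg_per_m3: float) -> float:
    """Point SAR in W/kg from tissue conductivity, RMS E-field and tissue density."""
    if density_kg_per_m3 <= 0:
        raise ValueError("density must be positive")
    return sigma_s_per_m * e_rms_v_per_m ** 2 / density_kg_per_m3


def margin_to_limit(sar_w_per_kg: float, regime: str) -> float:
    """Positive result means below the limit (illustrative; real limits are mass-averaged)."""
    limit, _mass = SAR_LIMITS[regime]
    return limit - sar_w_per_kg


# Hypothetical tissue and field values, for illustration only.
sar = local_sar(sigma_s_per_m=1.5, e_rms_v_per_m=30.0, density_kg_per_m3=1000.0)
for regime, (limit, mass) in SAR_LIMITS.items():
    print(f"{regime}: SAR {sar:.2f} W/kg vs {limit} W/kg over {mass}, "
          f"margin {margin_to_limit(sar, regime):+.2f}")
```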

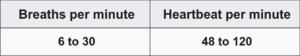

Table 1: Vitals of a Healthy Person

Table 2: Age-wise Resting Heart Rate

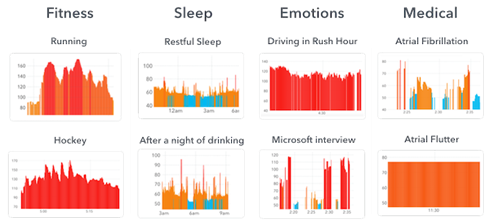

Figure 1: Variation of Heart Rate based on individual's fitness, stress and medical states

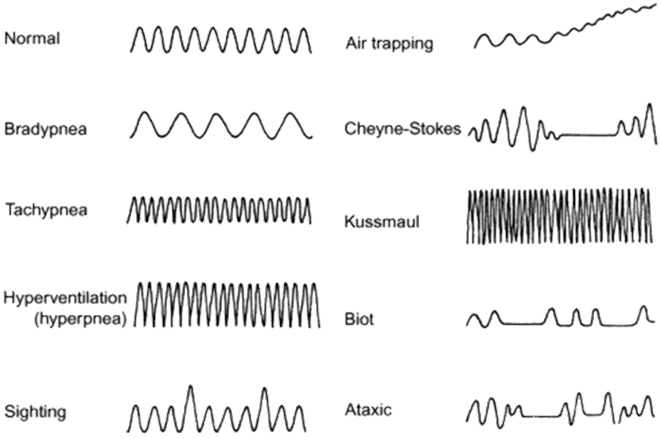

Figure 2: Breath Pattern

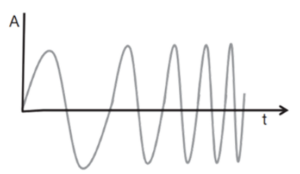

Figure 3: Chirp in time domain

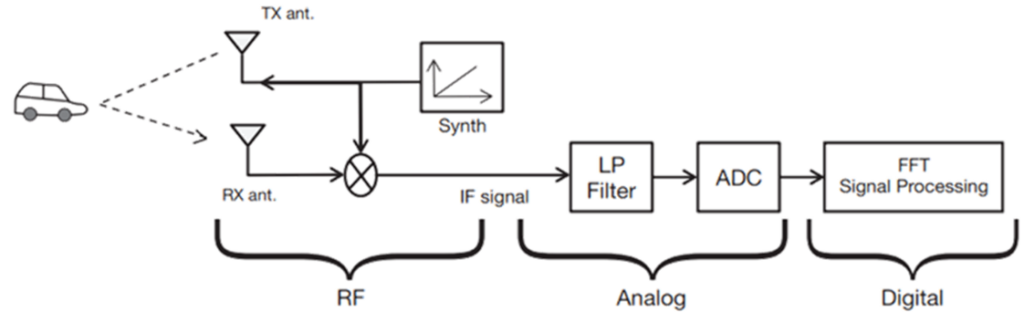

Figure 4: FMCW Radar Block diagram (Source: TI.com)

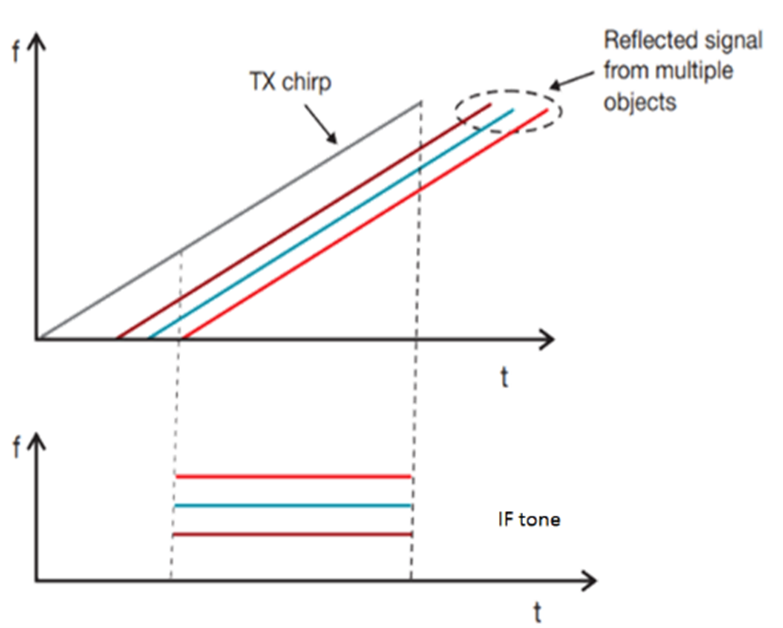

Figure 5: Frequency domain representation of TX and Rx Chirps and the IF frequency tones (Source: TI.com)

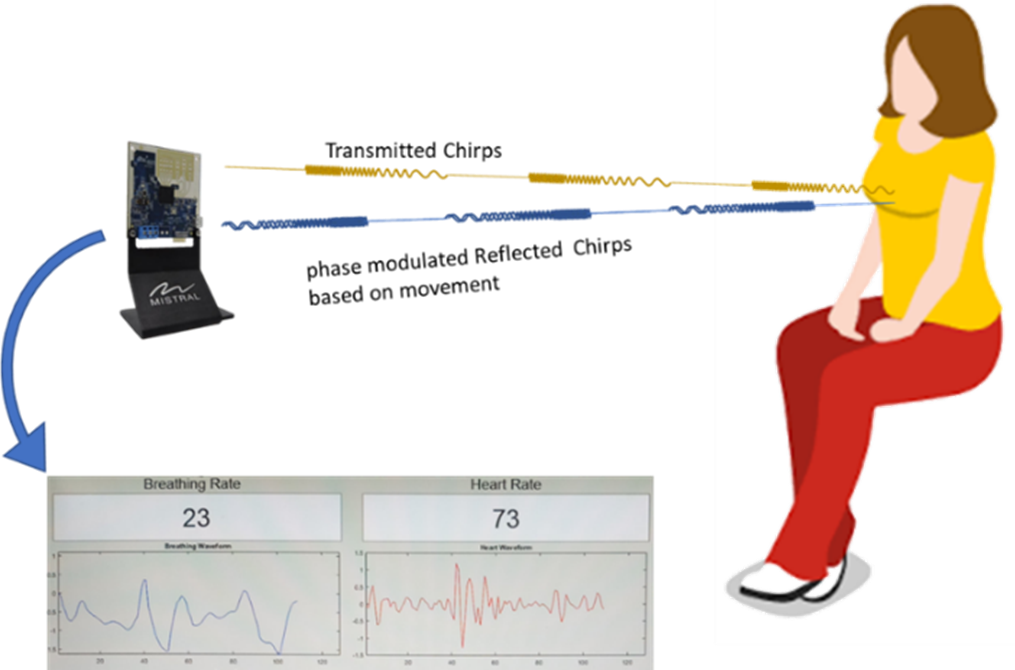

Figure 6: HR and BR detection setup

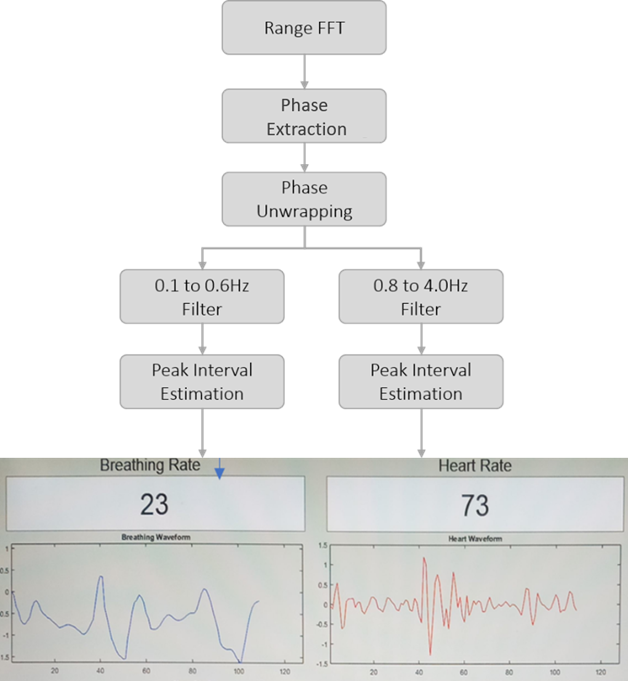

Figure 7: HR and BR detection Algorithm

According to a study conducted by IEEE, by 2040 three of every four vehicles will be autonomous. While most major players focus on autonomous or semi-autonomous passenger vehicles, there is a huge gap in applying the relevant technologies to security and surveillance applications.

ROS: The Robot Operating System (ROS) is a flexible, open-source framework for developing robot software. It provides tools and support for various sensors, algorithms, visualization and simulation, helping developers build robust software. ROS allows developers to reuse modules and build applications in Python, C++ and Java.

LAMP: LAMP stands for Linux, Apache, MySQL and PHP/Python, four open-source components. LAMP is a reliable platform for developing web applications for autonomous vehicles, and it eases the developer's job by minimizing the programming effort that a complex autonomous platform or robot demands.

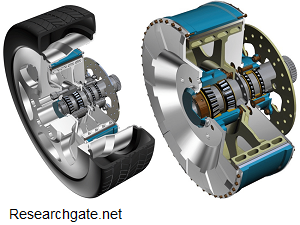

Wheel Hub Motor: Hub motors power each wheel and provide thrust and launch power to the vehicle. Integrating powerful hub motors makes an autonomous vehicle efficient and capable in tough, demanding environments.

mmWave Radar: Radars provide crucial data for safe and reliable autonomous vehicle operation, such as obstacle detection, proximity warnings, collision avoidance, lane departure warnings and adaptive cruise control. One big advantage of radar over other sensors is that it works reliably in adverse conditions such as rain, cloud, fog, dust or low light. Recently, 77 GHz radar modules have been gaining popularity because they offer better object resolution and greater accuracy in velocity measurement. These modules come in ultra-compact form factors and provide substantial on-board processing power.
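
Much of the resolution advantage of the 77 GHz band comes from the wider chirp bandwidth it permits (up to 4 GHz in the 77 to 81 GHz range, versus 250 MHz in the 24 GHz ISM band). The sketch below applies two textbook FMCW radar relations, consistent with the chirp and IF-tone figures earlier in this post: range resolution ΔR = c/(2B), and target range R = c·f_IF/(2S), where S is the chirp slope. The chirp slope and IF tone in the usage lines are hypothetical values.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_resolution_m(sweep_bandwidth_hz: float) -> float:
    """Smallest separation at which two targets appear as distinct peaks: c / (2B)."""
    return SPEED_OF_LIGHT / (2 * sweep_bandwidth_hz)


def range_from_if_m(if_freq_hz: float, chirp_slope_hz_per_s: float) -> float:
    """Target range from the IF (beat) tone of an FMCW chirp: c * f_IF / (2 * S)."""
    return SPEED_OF_LIGHT * if_freq_hz / (2 * chirp_slope_hz_per_s)


# Sweep bandwidth available in the 24 GHz ISM band versus the 77-81 GHz band.
for label, bw in [("24 GHz ISM (250 MHz)", 250e6), ("77-81 GHz (4 GHz)", 4e9)]:
    print(f"{label:<22} range resolution ~ {range_resolution_m(bw) * 100:.1f} cm")

# Hypothetical chirp: slope of 30 MHz/us and a measured IF tone of 1 MHz.
print(f"target at ~{range_from_if_m(1e6, 30e6 / 1e-6):.2f} m")
```

A 4 GHz sweep resolves targets about 3.75 cm apart, sixteen times finer than a 250 MHz sweep. The shorter wavelength at 77 GHz also improves velocity resolution for a given frame time.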

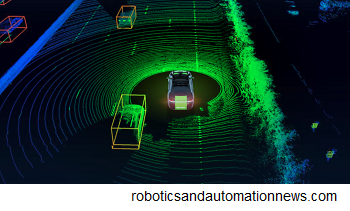

LiDAR: LiDAR generates high-resolution 3D maps of roads or target objects, with detailed information about road features, vehicles and other obstacles in the terrain. LiDAR provides fast object information and helps the autonomous vehicle build a better perception of its surroundings.

Identifying the surveillance payload requirements is critical for an autonomous surveillance vehicle. Depending on the system requirements, one can consider IR cameras, PTZ cameras or 360° bird's-eye-view cameras to survey the surroundings, along with communication systems, a communication network, control consoles, etc. for command and control.


and automatically. The availability of large video datasets and computational resources has enabled the respective DNN models to be trained effectively. Here are some of the VCA use cases for Traffic & Road Safety:

AI-based deep learning can also help solve crimes captured on CCTV cameras. Machine learning techniques can be used for colour conversion, regeneration and comparison between two video backgrounds, helping forensic teams identify vehicles or objects during post-incident investigations.


situations that are expected to have a long-term impact across the globe. To prevent the spread of infection, authorities worldwide have limited mass gatherings in public areas. However, since we have to live with the coronavirus, people will still congregate for personal reasons, and such gatherings are difficult to manage with traditional surveillance methods.

human-to-human contact has to be minimized. In this situation, drones and UAVs are proving to be a valuable tool for delivering medicines and other essentials, such as household goods, groceries, first-aid kits and food, to people living in red zones or restricted containment areas.

our day-to-day lives in one form or another, most often through the smart gadgets we use.

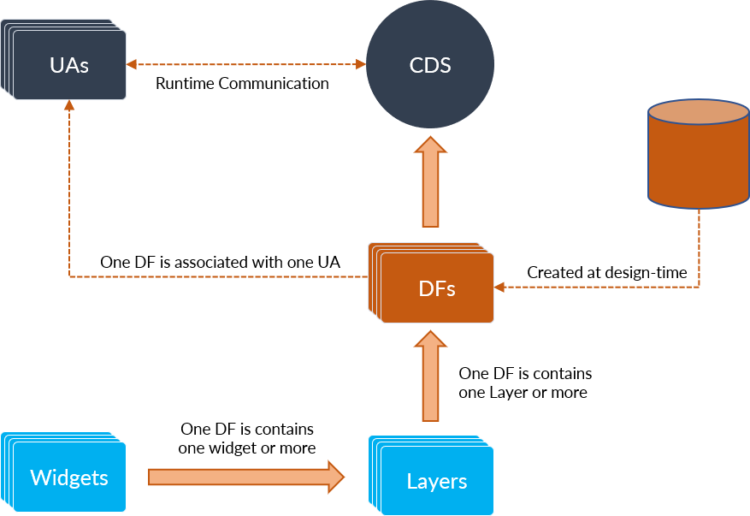

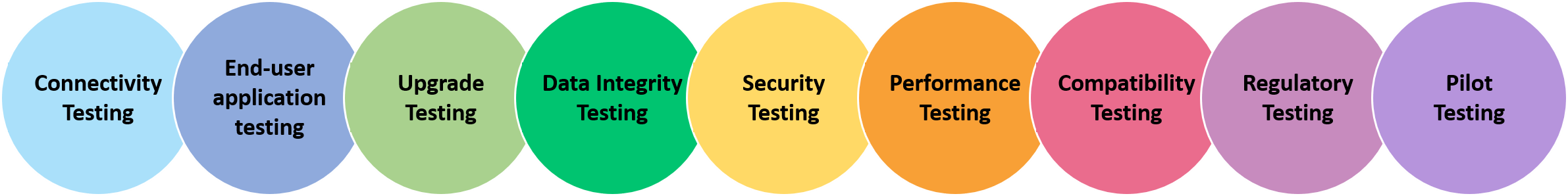

Four key factors call for a developer's attention when building an IoT app: the IoT device itself, the data ingestion layer, analytics and, finally, the end user. The data generated by IoT devices is transmitted over a wireless or wired interface, then processed and analyzed before being displayed to the user. The data is presented in an easy-to-understand format through an intuitively designed interface, called an IoT app or cloud app depending on the use case, which lets the user monitor, control and analyze the data and generate reports. An IoT app developer has to pay close attention to critical factors such as cross-device compatibility, interoperability, cloud integration, connectivity, scalability, data security, privacy, and the relevant standards and regulations. The developer should be proficient in a range of tools and techniques to develop reliable and robust IoT/cloud applications. Some of the tools for embedded and IoT application development are:


communicate with  such as Factory Automation, Oil and Gas, Mining and Industrial Safety, among others, to monitor and control complex systems and processes. Industrial apps are integral to industrial control systems: they provide real-time analytics and intelligence to users and optimize production operations, thereby enhancing productivity. These embedded applications can be implemented on various platforms, including industrial PCs, tablets and smartphones.

has all the required functionality and features, it is the UI that defines the user experience. Any medical or scientific product, be it a large system or a handheld device, demands an intuitive user interface, a seamless user experience, feature-rich UI/UX controls and ergonomic design. Medical and scientific apps present complex data in a simplified, user-friendly format and help users solve complex analytical challenges quickly and easily. Some of the popular tools used to implement scientific apps are:

control and diagnostics, HMI offers a safe and reliable interface for various complex industrial applications. Today, HMI is imperative to automation applications because it forms the data foundation of the system. HMI development calls for attention to three key things: a robust graphical user interface, memory optimization and power efficiency. Shrinking device sizes and increasing functional complexity pose many challenges in creating intuitive, user-friendly designs. In addition, the spread of graphics technologies across embedded applications is changing how we view HMI, as users and designers alike. An HMI functions as a gateway between a multitude of hardware and software components in an embedded system, including hardware modules, I/O devices, controllers and servers. For instance, in an industrial setting, robotic controls in complex machines are enabled and managed through HMIs. Some of the popular tools used are Microsoft's Visual Studio .NET, Qt/QML, Android and React Native.

In most cases, it complements a PC or Web Application by enabling remote access to the embedded system. Embedded mobile apps are widely used in the Industrial, Healthcare and Automotive industries. One of the primary concerns of Embedded Mobile Applications is security, since these applications handle critical and confidential data over the internet. A foolproof, secure app platform is critical to avoid any kind of data breach. Mobile devices are available on a wide range of processors and operating systems, so the application should work seamlessly irrespective of the platform it runs on.

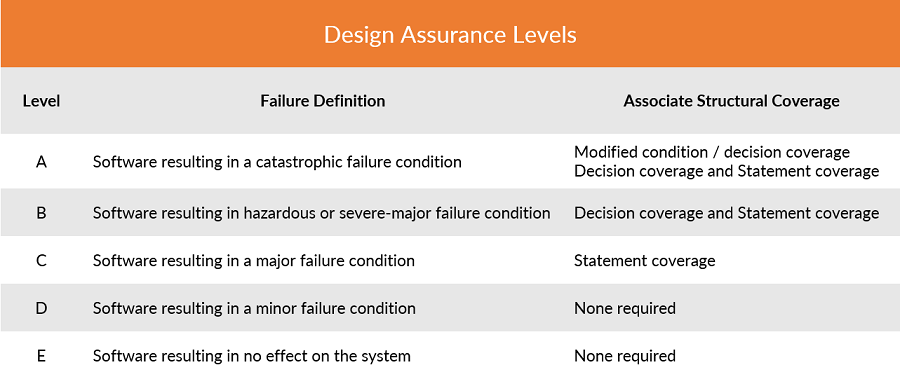

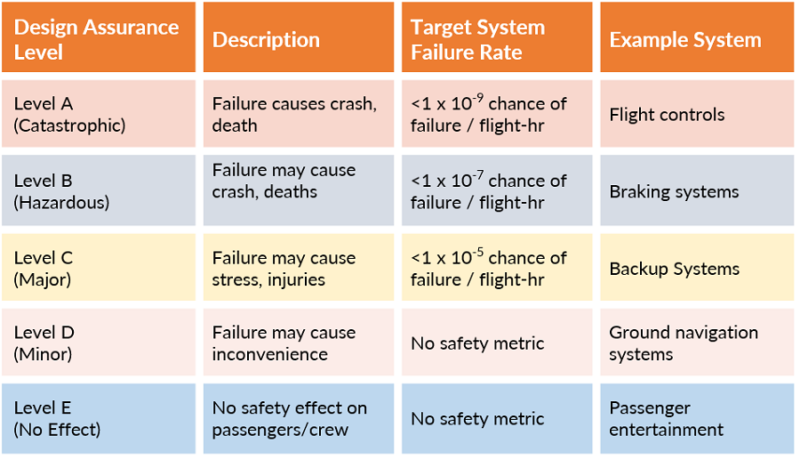

sequential instructions executed directly on the system hardware (commonly on microprocessors or microcontrollers) and runs without an OS. Compared with OS-based applications, bare-metal applications are typically faster, more power efficient and use less memory. Due to these characteristics, bare-metal apps are widely used in time-critical, low-latency applications that have stringent boot-time requirements but minimal CPU bandwidth, connectivity and memory; for example, DO-178 compliant applications for mission-critical and safety-critical systems. Headless Apps are used in Embedded Apps Development for wearable, medical, home automation, industrial, and health and wellness devices, where a user interface is not required to execute the functionalities. Some of the popular tools used for Embedded Applications Development for Bare-metal and Headless Apps include,

devices is enormous.

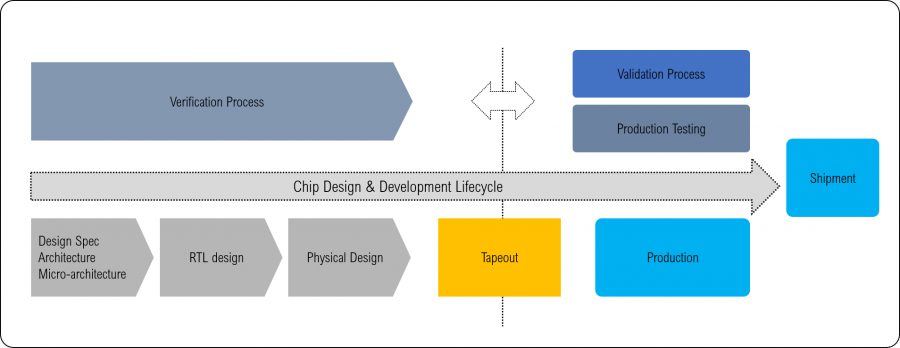

Fig: Typical Chip Manufacturing Flow

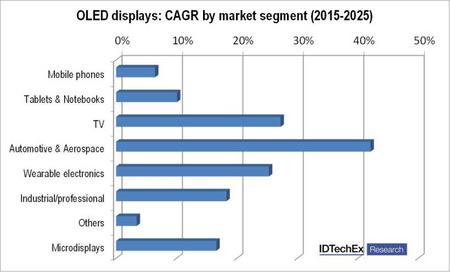

Throughout the early to mid-1960s, some in-flight movies were played back from videotape, using early compact transistorized videotape recorders, and shown on CRT monitors. These bulkhead displays in In-Flight Entertainment systems were commonly placed in the aisles above the passenger seats after every few rows. More than twenty monitors were installed in an aircraft, and passengers viewed movies on this unique closed-circuit system. This was an era long before solid-state circuit boards and lightweight systems. In the late 1970s and early 1980s, CRT-based projectors began to appear on newer wide-body aircraft, such as the Boeing 767. In the late 1980s and early 1990s, some airlines upgraded the old film-based In-flight Entertainment solutions on their older wide-bodies to CRT-based systems. In 1988, the Airvision company introduced the first in-seat audio/video on-demand systems, using 2.7-inch LCD technology, which Northwest Airlines ran on its Boeing 747 fleet. It received an overwhelmingly positive passenger reaction and, as a result, it completely replaced the CRT technology.

Wireless IFE is a system that distributes content wirelessly to passengers' own devices. Wireless IFE systems are lighter than traditional In-flight Entertainment solutions, and most passengers favor their own devices for in-flight entertainment.

and highly efficient wearable devices are helping people maintain their daily routines, track their health and become more aware of it.

both wired and wireless, are making life easier for patients, especially the elderly and physically challenged, by allowing them to consult doctors and get prescriptions on their smartphones. Doctors can also monitor patients' health remotely and make diagnosis and treatment decisions quickly.

instruments and devices used for diagnosis, therapy, research, surgery, and monitoring and analysis of a patient's health. Medical Electronics combines embedded systems, software applications and medical science to improve healthcare services. With embedded technology, physicians can obtain a patient's medical reports instantly, view them on embedded software-driven electronic devices, monitor the patient and consult remotely without any hassle.

ECG signal, complete data and analysis, as well as comprehensive reporting of the patient's condition. Electrodes are placed at various points on the patient's torso; the device detects the electrical activity of the heart, records the electrical signals and displays the data on a digital screen. ECG machines range from large 12-lead machines to handheld, wireless units. Devices such as wireless ECGs allow the results to be shared with a doctor, who can then monitor the patient's heart rate variability remotely.
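Heart rate variability is usually quantified from RR intervals, the times between successive R-peaks on the ECG trace. The following is a minimal Python sketch, assuming R-peak detection and artifact removal have already happened on the device, that computes three common time-domain measures: mean heart rate, SDNN and RMSSD. The function name `hrv_metrics` and the sample intervals are hypothetical and not taken from any device SDK.

```python
import math
from statistics import mean, stdev


def hrv_metrics(rr_ms):
    """Return basic time-domain HRV metrics for a list of RR intervals (ms).

    Assumes R-peaks were already detected and ectopic beats/artifacts removed,
    so every interval is a normal-to-normal (NN) interval.
    """
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between adjacent beat-to-beat intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return {
        # Average heart rate: 60000 ms per minute divided by the mean interval.
        "mean_hr_bpm": 60000.0 / mean(rr_ms),
        # SDNN: sample standard deviation of the NN intervals.
        "sdnn_ms": stdev(rr_ms),
        # RMSSD: root mean square of successive differences.
        "rmssd_ms": math.sqrt(mean(d * d for d in diffs)),
    }


if __name__ == "__main__":
    rr = [800, 810, 790, 805, 795]  # hypothetical RR intervals in ms
    print(hrv_metrics(rr))
```

SDNN reflects overall variability across the recording, while RMSSD emphasizes beat-to-beat changes; both are cheap enough to compute on a small embedded processor before the results are sent to a doctor.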

BP monitors can either be placed

These ARM processors were further enhanced to deliver high performance alongside efficient power management. Microprocessors are astounding devices: they integrate the brain of a computer onto a single electronic component. The computing power that once required a room full of equipment now fits onto a razor-thin slice of silicon. Due to their compact size, microprocessors are now widely used across the electronics industry, with the processor at the core of the system. Processors are categorized based on their internal architecture.

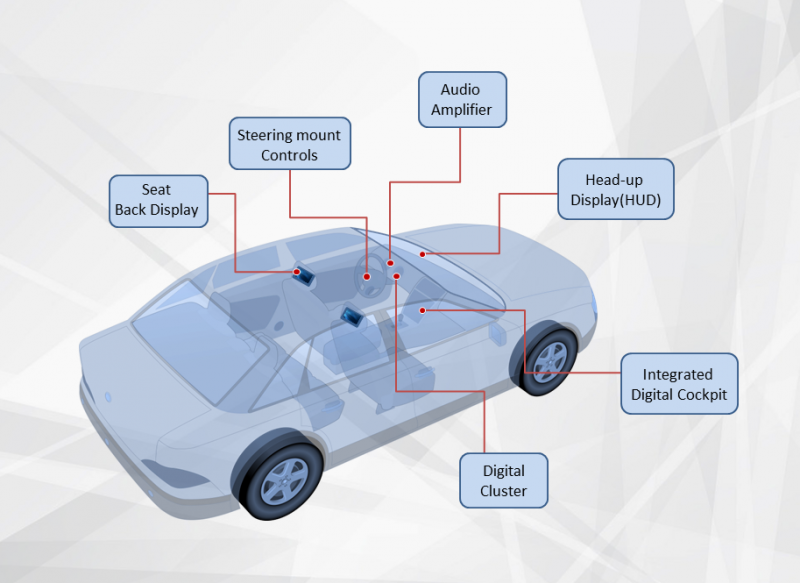

Key features of Automotive Infotainment Systems

most sought-after operating system for smartphones, tablets and other smart devices. In May 2019, the number of active Android devices crossed 2.5 billion, which speaks volumes about the popularity and acceptance the Linux-based open-source platform has gained over a decade. Today, Android holds about 85% of the global mobile operating system market. The latest version of the OS, Android 9.0 Pie, uses AI to improve efficiency and make the user experience more intuitive and user-friendly. New features worth citing include adaptive battery, adaptive brightness and gesture-based switching between apps. Beneath the framework, Android 8.0 and higher re-write the lower-level layers to adopt a new, more modular architecture, and devices running Android 8.0 and higher must support HALs written in HIDL, with a few exceptions.

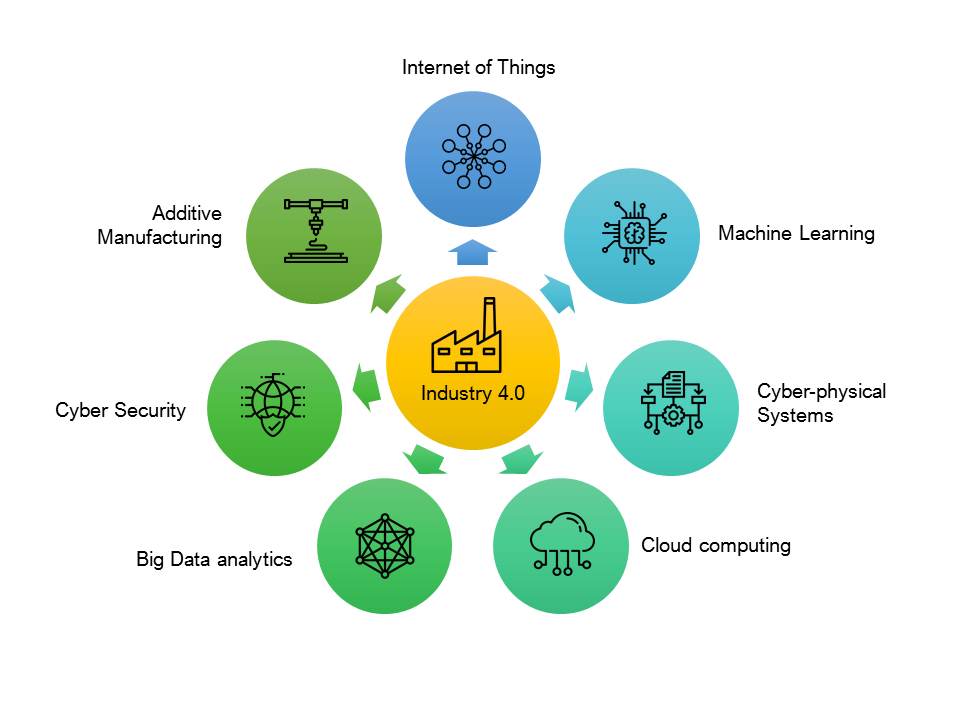

Broadly, the Internet of Things can be classified into Consumer IoT (CIoT) and Industrial or Enterprise IoT (IIoT). The key differences between CIoT and IIoT lie in the types of devices, the applications and the technologies that power them.

Electronics based Assistive Technology devices

The earliest examples of wearable technology are the spectacles and pocket watches invented centuries ago. With the advancement in technology, I

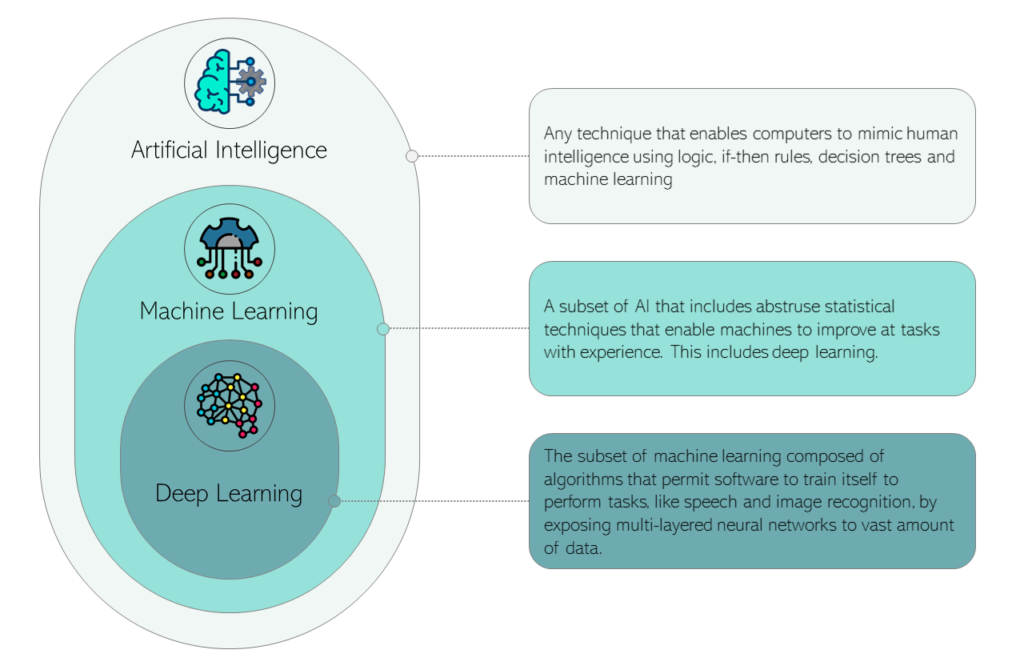

Artificial Intelligence is a broad concept of machines that can carry out tasks in a smart, intelligent way, emulating humans. Machine learning is an application of artificial intelligence (AI) that enables these machines to automatically learn and improve from experience without being explicitly programmed. It focuses on the development of programs that can access data and use it to learn on their own. Deep learning is a subset of machine learning; the term usually refers to deep artificial neural networks, and sometimes to deep reinforcement learning.
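The idea of learning "without being explicitly programmed" can be made concrete with a very small example. In the minimal Python sketch below, the rule y = 2x + 1 never appears in the learning code: the function recovers the slope and intercept from example pairs alone, using batch gradient descent on mean squared error. `fit_line`, the learning rate and the epoch count are illustrative choices, not taken from any framework; real machine learning and deep learning workloads would use an established library.

```python
def fit_line(xs, ys, lr=0.01, epochs=5000):
    """Learn w and b so that y is approximately w * x + b.

    Uses batch gradient descent on mean squared error. The relationship
    between x and y is never written into the code; it is estimated from
    the example pairs alone.
    """
    if not xs or len(xs) != len(ys):
        raise ValueError("xs and ys must be non-empty and the same length")
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        # Prediction error for every training example.
        errs = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errs, xs))
        grad_b = 2.0 / n * sum(errs)
        # Take a small step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0, 1, 2, 3, 4]
    ys = [1, 3, 5, 7, 9]  # generated by y = 2x + 1, which fit_line is never told
    w, b = fit_line(xs, ys)
    print(f"learned w={w:.3f}, b={b:.3f}")
```

A deep neural network follows the same loop of predicting, measuring error and adjusting parameters against the gradient, only with millions of parameters instead of two.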